Understanding Docker, Containers and Safer Software Delivery

Databases, dependencies, cron jobs … Applications today have so many layers that it isn’t a surprise when moving things takes a lot of time. But it doesn’t have to be that way. Today, you can ship software to virtually any environment, and be up and running in seconds. Enter Docker.

Software delivery

Delivering software used to be easy. The hard part was programming, but once you finished you would just hand over the product, maybe fix some bugs, and that would be all.

Later, with the “LAMP stack” (Linux, Apache, MySQL, PHP) — which was widely supported by hosting companies — things got slightly more complicated, but were still manageable. You could deliver dynamic sites linked to databases and set everything up via control panels.

More recently, the landscape has become even more diverse and demanding as new technologies have broken in: NoSQL databases, Node.js, and programming languages like Python and Ruby have all gained prominence. All of these and more have opened up lots of possibilities, but delivering software is no longer so easy.

Deployment

Applications have become hard to deploy. Even if you get yourself a dedicated server, you still have to install and configure everything, and handle the maintenance needed to get it all up and running. And even once everything works, today’s systems are complex and tightly coupled, combining different services and programming languages, so there’s always a chance that things will suddenly break.

Docker to the rescue

Docker makes delivering software easy again. It allows you to set up everything: the software you’ve developed, the OS environment in which it will run, the services it needs, and the modules and back-end tools such as cron jobs. All of it can be set up to run in minutes, and it will run on the target system the same way it runs in your development environment.

The Problems Docker Solves

These are some of the issues you’ll come across at some point or another when delivering software:

- The application you carefully developed with your favorite language (Python, Ruby, PHP, C) doesn’t seem to work on the target system, and you can’t quite figure out why.

- Everything was working just fine … until someone updated something on the server, and now it doesn’t anymore.

- An otherwise minor dependency (e.g. a module that’s used only occasionally, or a cron job) causes problems when your client uses the software … But it was working just fine on your computer when you tested it!

- A service your product relies on, like a database or a web server, has some problem (e.g. high traffic for a website, or some problematic SQL code) and acts as a bottleneck slowing down the entire system.

- A security breach compromises some component of the system and, as a result, everything goes down.

These issues fall within the somewhat fuzzy territory of “DevOps”, with some of them involving maintenance issues (server updates), some testing issues (checking module versions), and some deployment issues (installing and setting up everything in a different location). It’s a real pain when deploying something that already works turns out to be problematic and time consuming instead of going smoothly.

Software Containers

You’re probably familiar with those large, standardized shipping containers that exist to simplify delivery around the world: the intermodal container.

You can put pretty much anything in one, ship it anywhere, and at the other end unload what’s there — a car, some furniture, a piano — in exactly the same, original condition.

In software development, we may spend days trying to get things working in a different environment, only for them to fail a couple of days later. It’s easier and faster to ship a working car to a different continent than to deliver software that works reliably. Isn’t that kind of embarrassing?

So people started thinking of something similar to shipping containers for delivering software: something you could use to ship software reliably, so that it would actually work as expected. The result: software containers.

This might make you think of software installers, like those used to distribute desktop applications. With an installer, all you can distribute is an executable and some runtime libraries (small programs that the main application needs in order to run), and only as long as these don’t conflict with those already installed on the system. In contrast, software containers let us ship pretty much anything, just as with physical containers.

Examples of what you can put in software containers include:

- a Python, Ruby or PHP interpreter, packed with all of the required modules

- any runtime libraries

- specific versions of certain modules (because you never know when a newer version will cause some problems)

- services your application needs, like a web server or a database

- some specific tweaks for the system

- back-end maintenance tools, such as cron jobs and other automation.

Simplified operations

Containers simplify operations dramatically. And they’re so practical, easy to create and easy to handle that there’s no need to put everything into a single one.

You can put the core of your application, with its libraries, in one container, and call services such as Apache, MySQL or MongoDB from different containers. This may all sound strange and even complicated, but bear with me and you’ll see that doing so not only makes a lot of sense, but is also much easier than it sounds.
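As a minimal sketch of that idea, assuming Docker is installed and using the official mysql:5.7 image from Docker Hub, the commands below run MySQL in one container and reach it from a second container over a shared network. The network name app-net, the container name db, the database name appdb and the password are placeholders, and the throwaway mysql client container merely stands in for your own application container.

```shell
# Placeholders: network "app-net", container "db", password "example".

# A user-defined network lets containers reach each other by name.
docker network create app-net

# The database service runs in its own container, in the background (-d).
docker run -d --name db --network app-net \
  -e MYSQL_ROOT_PASSWORD=example \
  -e MYSQL_DATABASE=appdb \
  mysql:5.7

# Wait until the MySQL server inside "db" accepts network connections.
until docker run --rm --network app-net mysql:5.7 \
    mysqladmin ping -h db -uroot -pexample --silent; do
  sleep 2
done

# A second, disposable container stands in for the application:
# it connects to the database simply by using the host name "db".
docker run --rm --network app-net mysql:5.7 \
  mysql -h db -uroot -pexample -e 'SHOW DATABASES;'

# Clean up when finished:
#   docker rm -f db && docker network rm app-net
```

Swapping in another service, such as MongoDB or a web server, follows the same pattern: each service gets its own container, and the application addresses it by container name.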

When to Use Software Containers

Before we get into the mechanics and some details of how it works, we’ll review some use cases. Here are some scenarios that would greatly benefit from using software containers:

- a web application that relies on back-end technologies

- a service (such as a web server or a database) that needs to be scaled up and down based on demand

- an application (web or otherwise) with a very specific setup (OS, tools, environment variables, etc.)

- a development environment that is easy to distribute among peers (that is, a quick and easy way to share a certain setup)

- an environment for sandboxing (to test things safely) that can rapidly be created and disposed as many times as needed

- a setup with an effective separation of concerns, with loosely coupled components (from apps to services to operating systems) that can be handled independently.

When Not to Use Software Containers

Conversely, before we all jump on the hype, there are other situations in which containers have little to offer, such as:

- a website that uses only client-side technologies such as HTML, CSS, and JavaScript

- a simple desktop application that can otherwise be distributed with a software installer

- a Windows-based development environment (such as .NET Framework or Visual Basic runtimes) that cannot be run on Linux.

What is Docker?

For those situations in which containers shine, you may be wondering how this technology is implemented in practice. So let’s look at how Docker delivers on all of these promises.

Docker: “Build, ship, and run any app. Anywhere.”

Docker is an open-source project, as well as a company based in San Francisco that supports that project. It was only born in 2013, and yet in that short time, while still partly in beta, it has been widely adopted across a number of industries.

But what is it? Docker is software that you run from the command line and that allows you to automate the deployment of applications inside software containers. From the Docker website:

Docker containers wrap a piece of software in a complete filesystem that contains everything needed to run: code, runtime, system tools, system libraries — anything that can be installed on a server. This guarantees that the software will always run the same, regardless of its environment.

As that may still be a little too abstract, let’s see what running a container is like.

A Docker demo

This is how you run the “hello-world” container:

$ docker run hello-world

And here’s the output, generated from within the container, with a little description of the Docker internals:

Hello from Docker.

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker Engine CLI client contacted the Docker Engine daemon.

2. The Docker Engine daemon pulled the "hello-world" image from the Docker Hub.

3. The Docker Engine daemon created a new container from that image which runs the executable that produces the output you are currently reading.

4. The Docker Engine daemon streamed that output to the Docker Engine CLI client, which sent it to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker Hub account:

https://hub.docker.com

For more examples and ideas, visit:

https://docs.docker.com/userguide/

Hopefully that was simple enough, but there isn’t much more we can do with the “hello-world” container, so let’s go further and run an interactive shell in an Ubuntu Linux container:

$ docker run -i -t ubuntu bash

# cat /etc/issue

Ubuntu 16.04 LTS \n \l

The first command starts a container from the ubuntu image and runs the bash shell in it, with -i keeping the session interactive (standard input stays open) and -t allocating a terminal (TTY). The second command (cat /etc/issue) already runs inside the container (and we could have continued running commands, of course). To be clear: whether you’re on Windows, a Mac, or a Debian box, inside that container you’re on an Ubuntu machine. And for the record, once the image had been downloaded, that console was up and running in about a second!

Additionally, since containers are isolated, disposable environments, you can do crazy things in them, such as:

# rm -rf /etc

# cat /etc/issue

cat: /etc/issue: No such file or directory

And when you exit or kill that container, all you have to do is run the image again, and you’ll get a new container in exactly the same initial state:

# exit

$ docker run -i -t ubuntu bash

# cat /etc/issue

Ubuntu 16.04 LTS \n \l

It’s that simple!

How Docker Works

The architecture

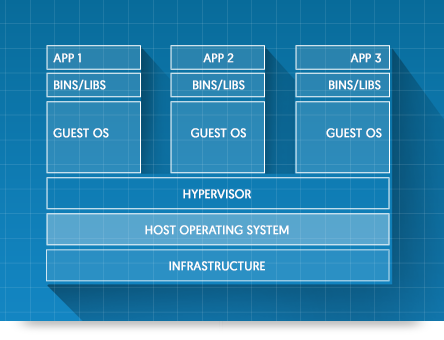

If you’re familiar with virtual machines (VMs), which run on top of a hypervisor, you may already have started to notice some differences. While they allow you to run different operating systems (OSs), VMs have a heavy memory footprint on the host machine, as every guest OS is loaded from scratch into main memory. And since every OS requires its own binaries and libraries for the entire system, that usually accounts for several extra GBs of disk space. Last but not least, just as when booting a physical machine, a VM can take minutes to load before it’s operational.

Software containers, on the other hand, eliminate most of this overhead, because they share the host OS’s kernel instead of booting their own, and the Docker Engine manages the isolation and resources of each container. That’s why a minimal yet fully working Linux distribution such as Ubuntu takes up only about 100MB and can be launched in about a second.

Images and containers

You’ll hear a lot about “images” and “containers” when working with Docker, so let’s clarify what they are.

An image (sometimes called “the build”) is a read-only resource that you download or create, packed with everything that’s needed for an operational environment. Building images is very easy, because you can use existing images as a base (for example, a Debian distribution) and tell Docker what you want on top of it, such as certain development tools, libraries, or even your own application.

A container, on the other hand, is the isolated environment you get when you run an image. It is read-write, so you can do whatever you want inside it, and it starts in precisely the state defined when the image was built. Since images are read-only, every new container you run is a fresh environment, no matter what you did in other containers. You can run as many simultaneous containers as your system can handle.

So you run containers from images. An analogy that can be useful, if you work with an object-oriented language such as C++ or Java, is that an image is like a class, whereas a container is an instance of that class.

Docker workflow

Since this is an introductory article, we won’t go into full detail just now, but to give you an idea of what a typical Docker workflow looks like, here are the three main steps:

- Build an image using the Dockerfile, a plain text file in which you set the instructions for what you want to bundle in the build — such as base OS, libraries, applications, environment variables and local files. (See the Dockerfile reference for more.)

- Ship the image through the Docker Hub, or your private repository. You can now very easily distribute this application or development environment with Docker — and in fact, there are dozens of official, pre-built images offered by software developers, ready to use. (Explore the Docker Hub for more.)

- Run a container on a host machine. All you need is Docker installed to run containers, deploy microservices (that is, launch different containers running different services), and get the environment you need for development or deployment.

What to Do Next

The possibilities with software containers are immense, and in many cases they provide definitive solutions to what used to be open problems in development and operations (DevOps). Here is a list of resources to get you started working with Docker and software containers.

The requirements for installing Docker are somewhat high:

- Windows: 64-bit operating system, Windows 7 or higher.

- Mac: OS X 10.8 “Mountain Lion” or newer, with Intel’s hardware support for memory management unit (MMU) virtualization, and at least 4GB of RAM.

- Linux: 64-bit installation (regardless of your Linux distro and version), with a 3.10 kernel or higher. (Older kernels lack some features required to run Docker containers.)
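On Linux, you can check these requirements before installing. The sketch below is a hypothetical POSIX shell helper (not part of Docker) that compares a kernel release string, by default the output of uname -r, against the 3.10 minimum; uname -m shows the machine architecture, such as x86_64 on a 64-bit PC.

```shell
# Hypothetical helper: succeeds if the kernel release is 3.10 or newer.
# Takes a release string such as "4.4.0-21-generic"; defaults to `uname -r`.
kernel_at_least_3_10() {
  release=${1:-$(uname -r)}
  major=${release%%.*}           # text before the first dot, e.g. "4"
  rest=${release#*.}             # text after the first dot, e.g. "4.0-21-generic"
  minor=${rest%%[!0-9]*}         # leading digits only, e.g. "4"
  [ "$major" -gt 3 ] || { [ "$major" -eq 3 ] && [ "${minor:-0}" -ge 10 ]; }
}

# Check the running system.
uname -m    # architecture, e.g. x86_64 on a 64-bit PC
if kernel_at_least_3_10; then
  echo "Kernel $(uname -r) meets the 3.10 minimum"
else
  echo "Kernel $(uname -r) is older than 3.10"
fi
```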

Because Docker is a Linux-based technology, on Windows and Mac you will first need to install the Docker Toolbox, which sets up a Docker environment on your computer, including a virtual machine running Linux and the Docker Engine. For installation and setup instructions, refer to the OS-specific guides.

For the different Linux distributions, you just need to install the Docker Engine itself.

Once you have Docker installed, you can follow a step-by-step walkthrough on running and building your own images, creating a repository on the Docker Hub, and more.

To go further, you’ll find many technology-specific Docker tutorials on SitePoint, such as for Ruby, WordPress, and Node.js. (You can also explore all of SitePoint’s Docker offerings.)

Finally, keep in mind that this technology consists of a lot more than a command-line tool for running containers. Docker is an ecosystem of products and services aimed at covering everything you can possibly do with containers, from creation to distribution, and from running on a single machine to orchestration across hundreds or even thousands of servers.