Accelerating the Cloud: What to Expect When Going Cloud Native

This article is Part 4 of Ampere Computing’s Accelerating the Cloud series. You can read them all on SitePoint.

So far in this series, we’ve covered the difference between x86-based and cloud native platforms, and the investment required to take advantage of going cloud native. In this installment, we’ll cover some of the benefits and advantages you can expect to experience as you transition to a cloud native platform.

Benefits and advantages of Cloud Native Processors for cloud computing:

- improved performance per rack and per dollar

- greater predictability and consistency

- increased efficiency

- optimal scalability

- lower operating costs

Peak Performance Achieved with Cloud Native Processors

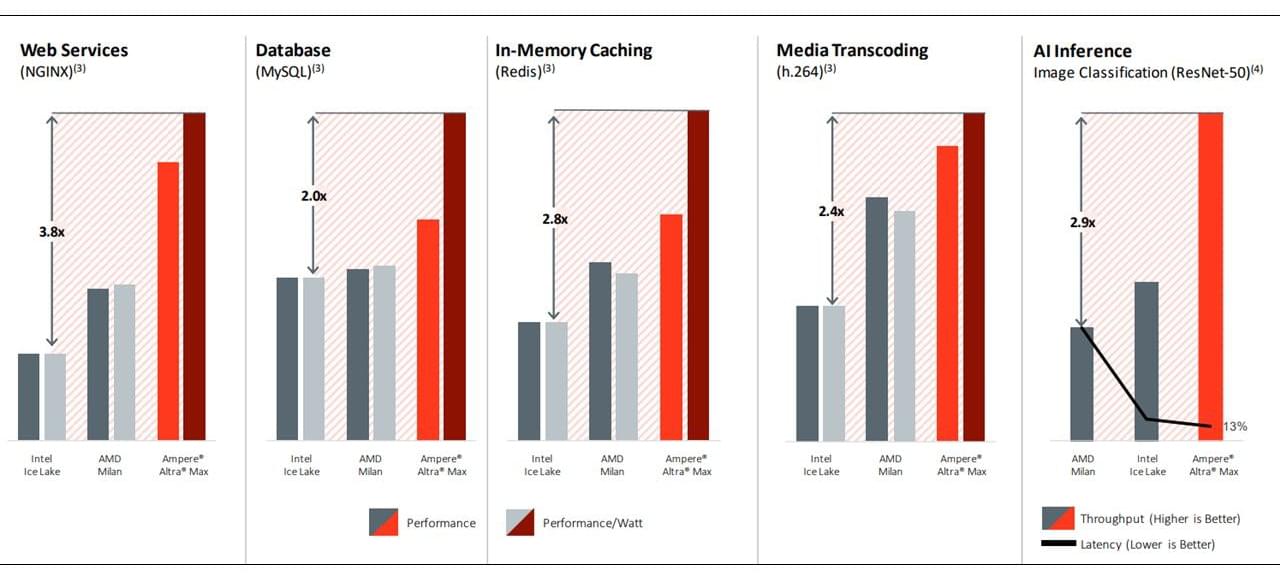

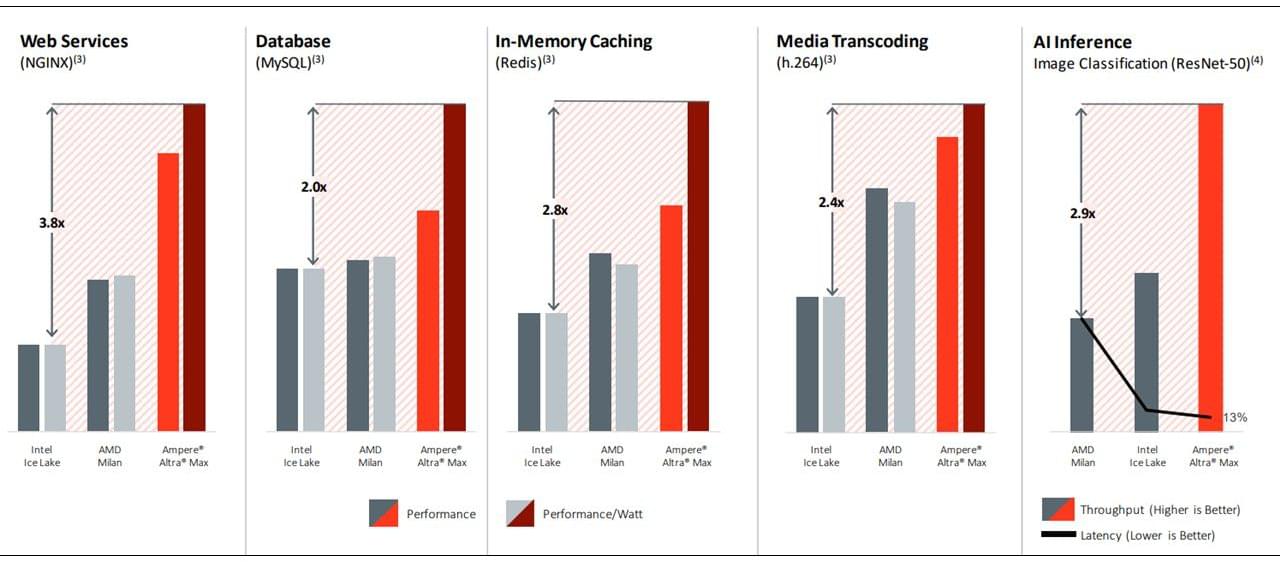

Instead of providing a complex architecture burdened by legacy features like the x86, Ampere Cloud Native Processors have been architected to more efficiently perform common cloud application tasks for popular workloads. This leads to significantly higher performance for the key cloud workloads businesses rely upon most.

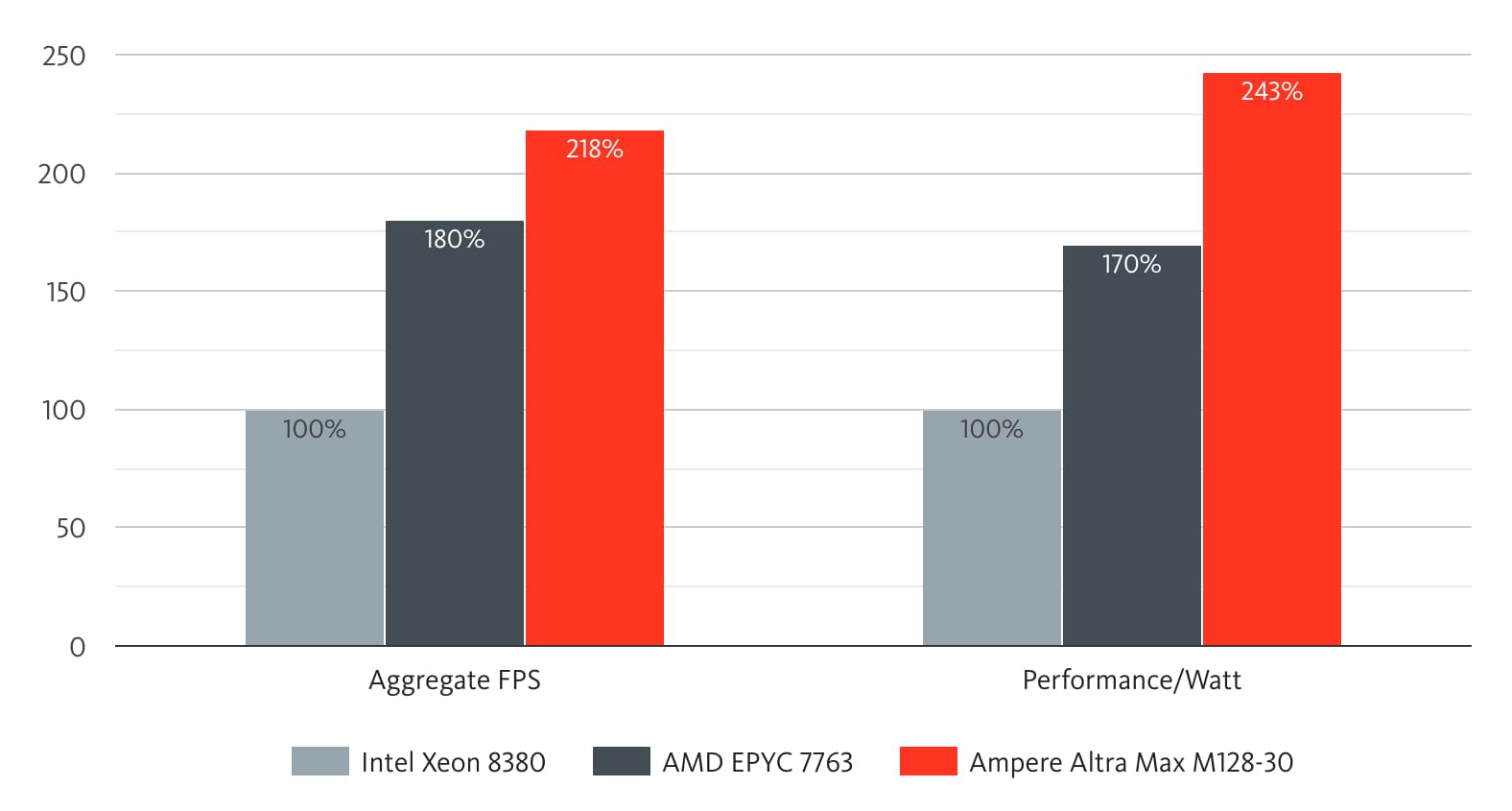

Figure 1: The Ampere cloud native platform delivers significantly higher performance compared to x86 platforms across key cloud workloads. Image from Sustainability at the Core with Cloud Native Processors.

Cloud Native Delivers Greater Responsiveness, Consistency, and Predictability

For applications that provide a web service, response time to user requests is a key metric of performance. Responsiveness depends upon the load and scaling; it’s critical to maintain acceptable response times for end-users as the rate of requests rises.

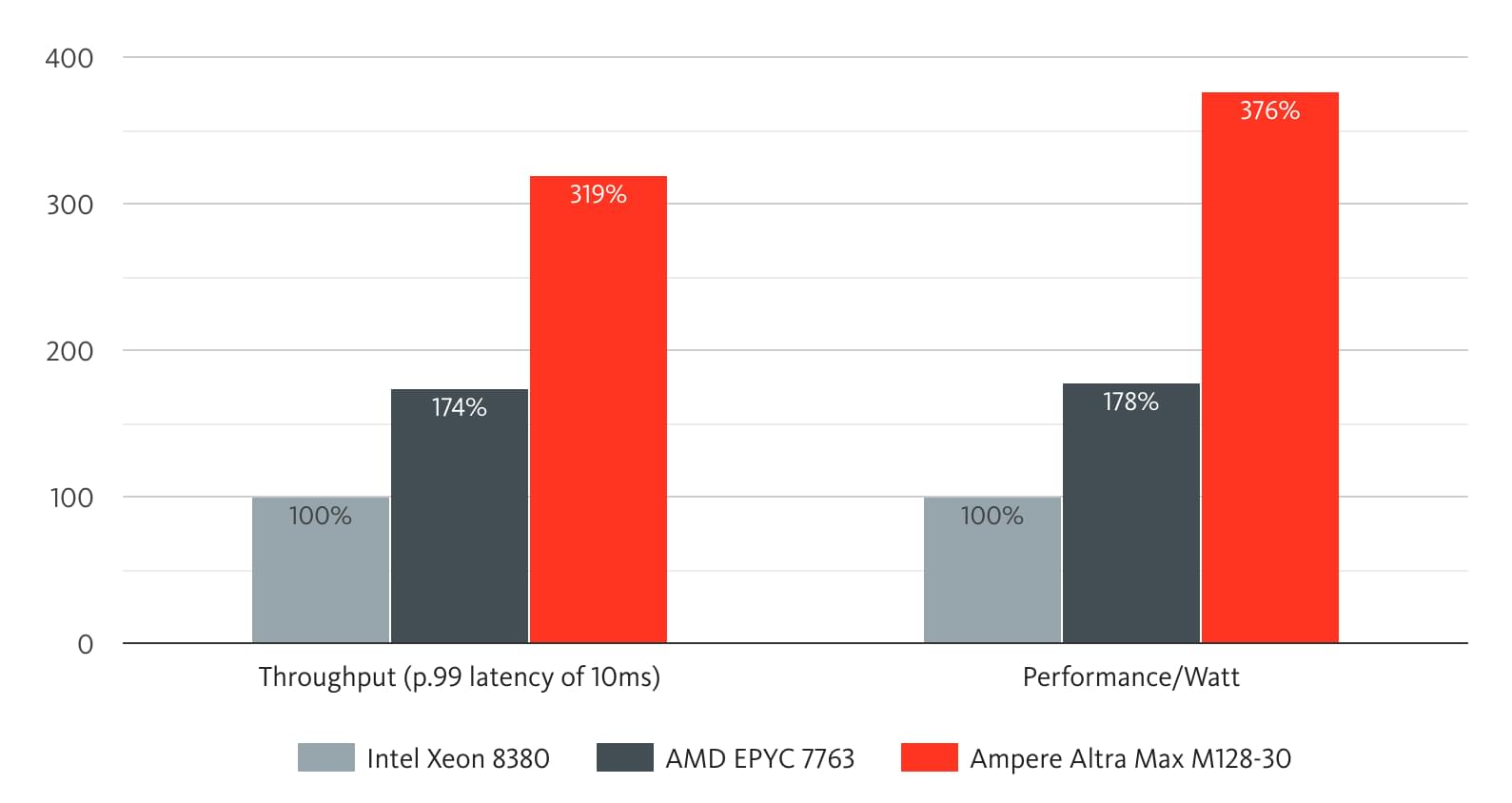

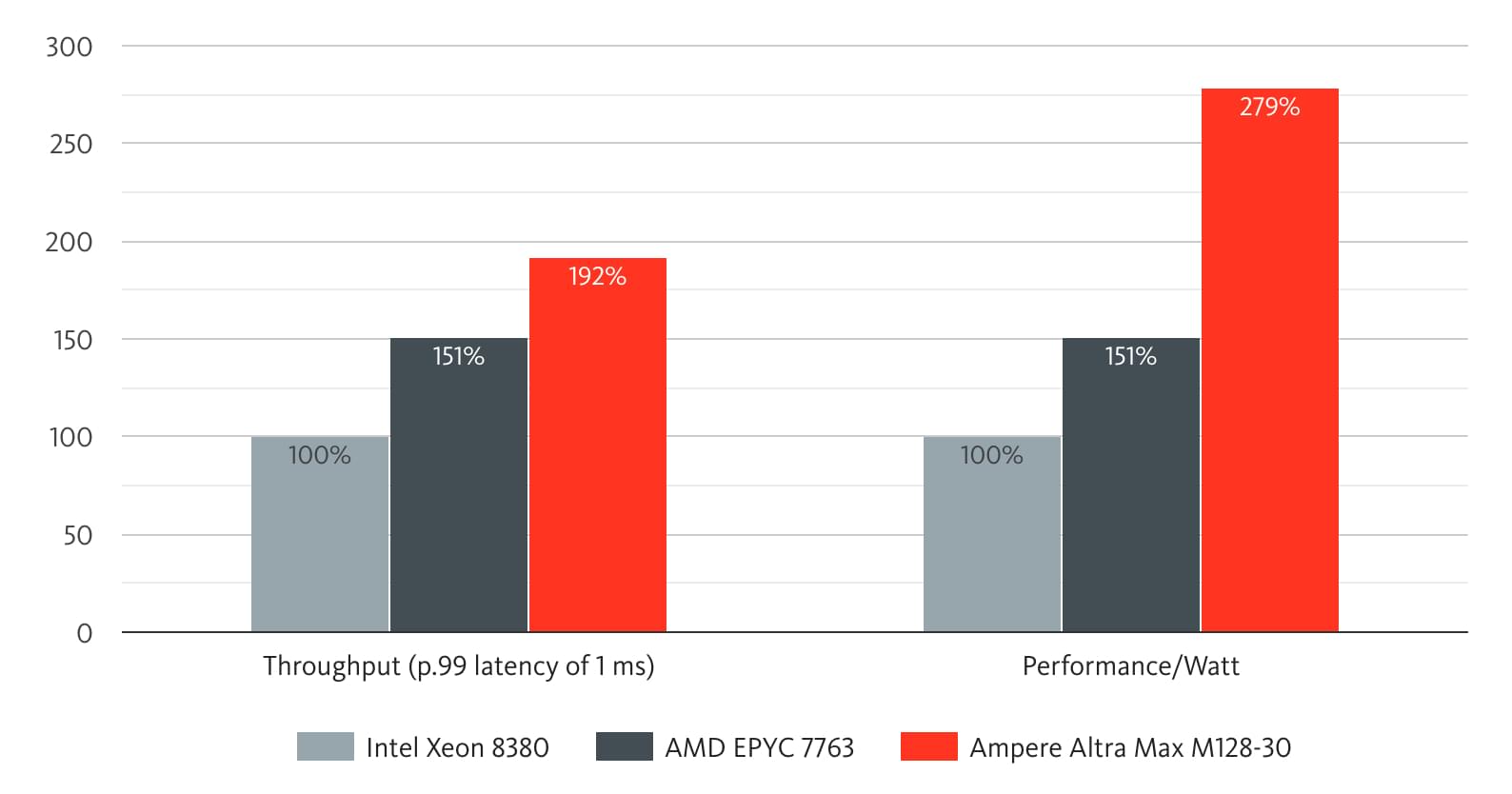

While peak performance is important, many applications must meet a specific SLA, such as providing a response within two seconds. For this reason, it’s common for cloud operations teams to measure responsiveness using P99 latencies — that is, the response time within which 99% of requests are satisfied.

To measure P99 latency, we increase the number of requests to our service to determine the point at which 99% of transactions still complete within the required SLA. This allows us to assess the maximum throughput possible while maintaining SLAs and assess the impact on performance as the number of users scales up.
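The measurement described above can be sketched in a few lines. This is a minimal illustration, not a benchmarking tool: the function names, the nearest-rank percentile method, and every latency figure in `measurements` are assumptions made for the example.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def max_rate_within_sla(latencies_by_rate, sla_seconds, pct=99):
    """Highest request rate whose pct-th percentile latency still meets the SLA, or None."""
    passing = [
        rate
        for rate, latencies in latencies_by_rate.items()
        if percentile(latencies, pct) <= sla_seconds
    ]
    return max(passing) if passing else None


# Hypothetical load-test results: request rate (req/s) -> observed latencies (s).
measurements = {
    100: [0.2] * 99 + [0.9],
    200: [0.4] * 98 + [1.5, 1.8],
    400: [0.8] * 95 + [2.5] * 5,
}

print(max_rate_within_sla(measurements, sla_seconds=2.0))
```

With a two-second SLA, the highest rate in this made-up data set that still passes is the one whose 99th-percentile latency stays at or below 2.0 seconds. The slowest 1% of requests at each rate decides the outcome, not the average.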

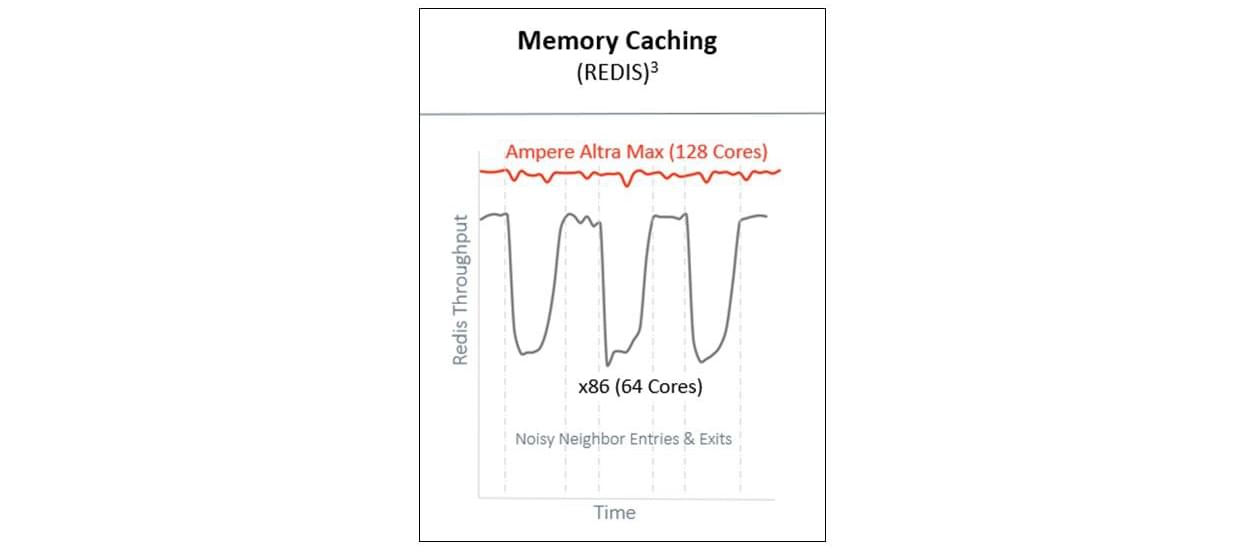

Consistency and predictability are two of the primary factors that impact overall latency and responsiveness. When task performance is more consistent, responsiveness is more predictable. In other words, the less variance in latency and performance, the more predictable the responsiveness of a task. Predictability also eases workload balancing.

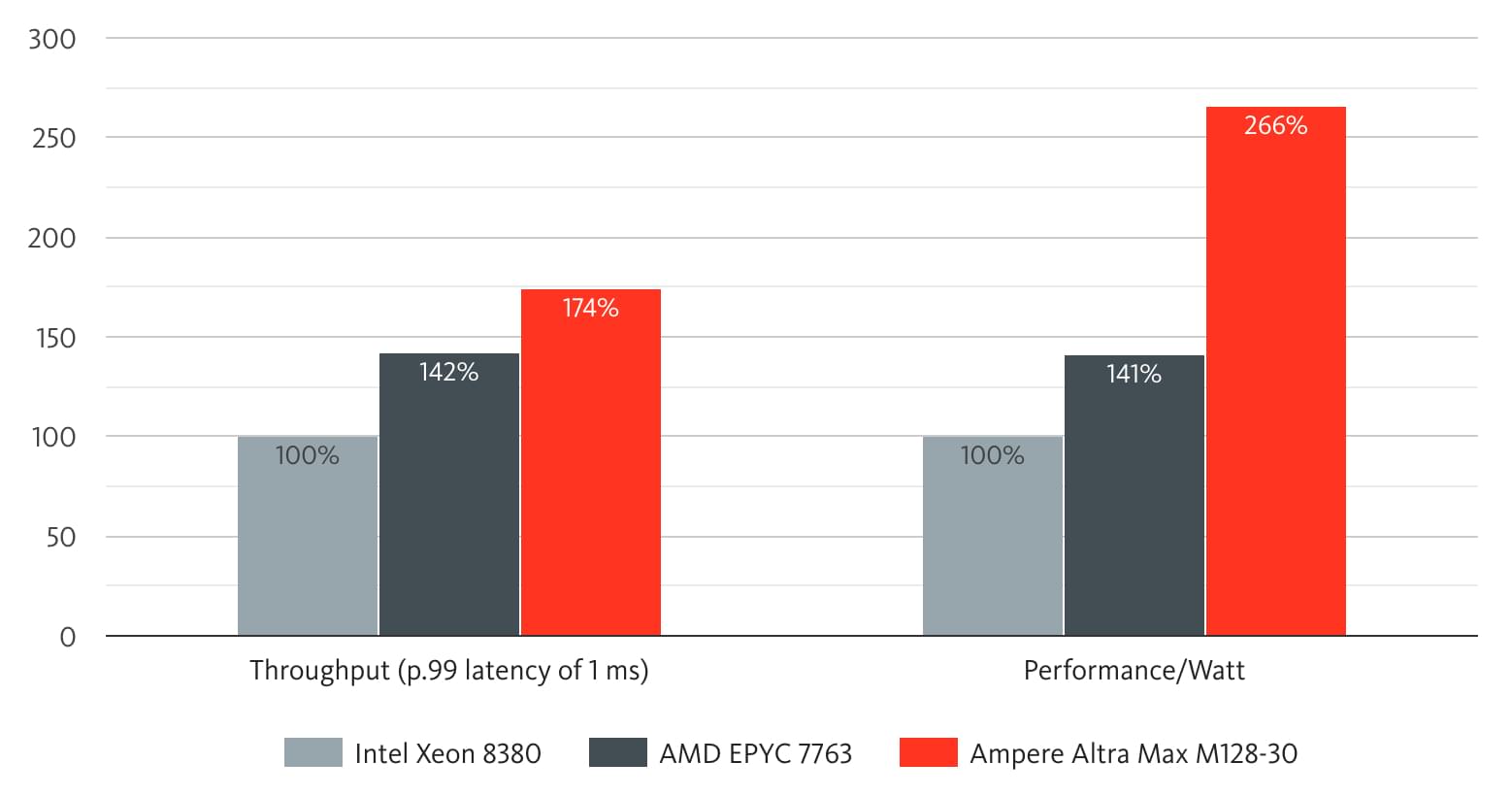

As described in Part 1 of this series, x86 cores are hyperthreaded to increase core utilization. With two threads sharing a core, it’s much harder to guarantee SLAs. Inconsistencies in hyperthreading overhead, along with other x86 architecture issues, lead to a higher variance in latency between tasks when compared to an Ampere Cloud Native Processor. Because of this difference, x86-based platforms can maintain a high peak performance but violate SLAs much sooner due to high latency variance (see Figure 2). In addition, the tighter the SLA (for example, milliseconds rather than seconds), the more this variance negatively impacts P99 latency and responsiveness.

Figure 2: Hyperthreading and other x86 architectural issues lead to higher variance in latency that negatively impacts throughput and SLAs. Image from Sustainability at the Core with Cloud Native Processors.

In this case, the only way to reduce latency is to lower the rate of requests. In other words, to guarantee SLAs, you must allocate more x86 resources to ensure that each core runs at a lower load to account for higher variability in responsiveness between threads under high loads. Thus, an x86-based application is more limited in the number of requests it can manage and still maintain its SLA.
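To see why variance, and not only average latency, determines whether an SLA holds, consider the rough sketch below. It assumes latency follows a normal distribution, which real services rarely do, and the mean, standard deviations, and SLA are hypothetical values chosen only to show the effect.

```python
from statistics import NormalDist


def p99_latency(mean_s, stddev_s):
    """P99 of a normal latency model (an illustrative assumption, not a measured distribution)."""
    return NormalDist(mu=mean_s, sigma=stddev_s).inv_cdf(0.99)


sla_s = 2.0   # hypothetical SLA
mean_s = 1.2  # same average latency in both cases

low_variance = p99_latency(mean_s, stddev_s=0.2)
high_variance = p99_latency(mean_s, stddev_s=0.5)

print(f"low variance  P99: {low_variance:.2f}s, meets SLA: {low_variance <= sla_s}")
print(f"high variance P99: {high_variance:.2f}s, meets SLA: {high_variance <= sla_s}")
```

Both cases share the same mean, yet only the low-variance one keeps its 99th percentile inside the SLA. The high-variance case has to run at a lower load, and therefore a lower mean latency, to pass.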

The higher performance efficiency of a cloud native platform results in less variance between tasks, leading to overall greater consistency and less impact on responsiveness, even when you increase the request rate and increase utilization. Because of its greater consistency, an Ampere Cloud Native Processor can handle many more requests, depending upon the application, without compromising responsiveness.

Figure panels: NGINX, Redis, h.264 media encoding, and Memcached performance and energy efficiency.

Better Performance Per Dollar with Cloud Native

The ability of a cloud native approach to deliver consistent responsiveness to an SLA with higher performance in a reproducible manner also means superior price/performance. This directly reduces operating costs as more requests can be managed by fewer cores. In short, a cloud native platform enables your applications to do more with fewer cores without compromising SLAs. Increased utilization translates directly to lower operating costs — since you’ll need fewer cloud native cores to manage an equivalent load compared to an x86 platform.

So, how much do you save? The basic unit of compute in the cloud is the vCPU. However, with x86-based platforms, each x86 core runs two threads, so if you want to disable hyperthreading, you must rent x86 vCPUs in pairs. Otherwise, an application gets to share an x86 core with another application.

On a cloud native platform, when you rent vCPUs, you are allocated entire cores. When you consider that 1) a single Ampere-based vCPU on a Cloud Service Provider (CSP) gives you a full Ampere core, 2) Ampere provides many more cores per socket with corresponding higher performance per Watt, and 3) Ampere vCPUs typically cost less per hour because of higher core density and reduced operating costs, this results in a cost/performance advantage on the order of 4.28X for an Ampere cloud native platform for certain cloud native workloads.
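The vCPU arithmetic behind this comparison can be sketched as follows. Every price and throughput figure here is a hypothetical placeholder, not Ampere or CSP data, so the resulting ratio illustrates the method only and does not reproduce the 4.28X figure.

```python
def cost_per_core_hour(vcpu_price_per_hour, vcpus_per_core):
    """Hourly cost of renting one whole physical core."""
    return vcpu_price_per_hour * vcpus_per_core


def throughput_per_dollar(requests_per_core_hour, core_cost_per_hour):
    """Requests served per dollar spent on one core for one hour."""
    return requests_per_core_hour / core_cost_per_hour


# Hypothetical prices: a hyperthreaded x86 core is rented as two vCPUs,
# while a cloud native vCPU maps to one full core.
x86_core_cost = cost_per_core_hour(0.04, vcpus_per_core=2)
native_core_cost = cost_per_core_hour(0.03, vcpus_per_core=1)

# Hypothetical per-core throughput while still meeting the SLA.
x86_value = throughput_per_dollar(1_000_000, x86_core_cost)
native_value = throughput_per_dollar(1_200_000, native_core_cost)

advantage = native_value / x86_value
print(f"price/performance advantage: {advantage:.2f}x")
```

The advantage compounds from two independent factors: the cost of a whole core and the throughput that core sustains within the SLA.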

Higher Power Efficiency, Better Sustainability, and Lower Operating Costs

Power consumption is a global concern, and managing power is quickly becoming one of the major challenges for cloud service providers. Currently, data centers consume between 1% and 3% of electricity worldwide, and this percentage is expected to double by 2032. In 2022, cloud data centers were expected to account for 80% of this energy demand.

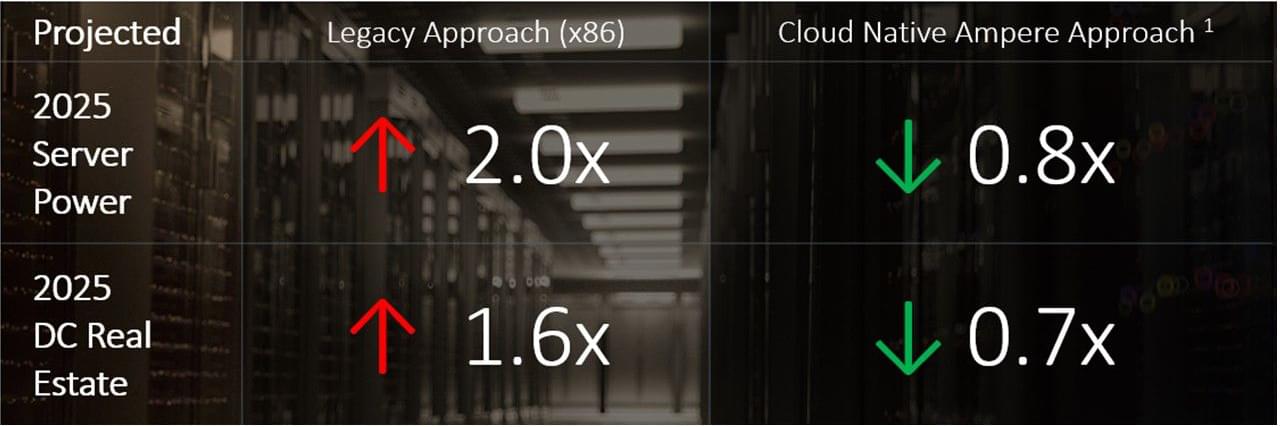

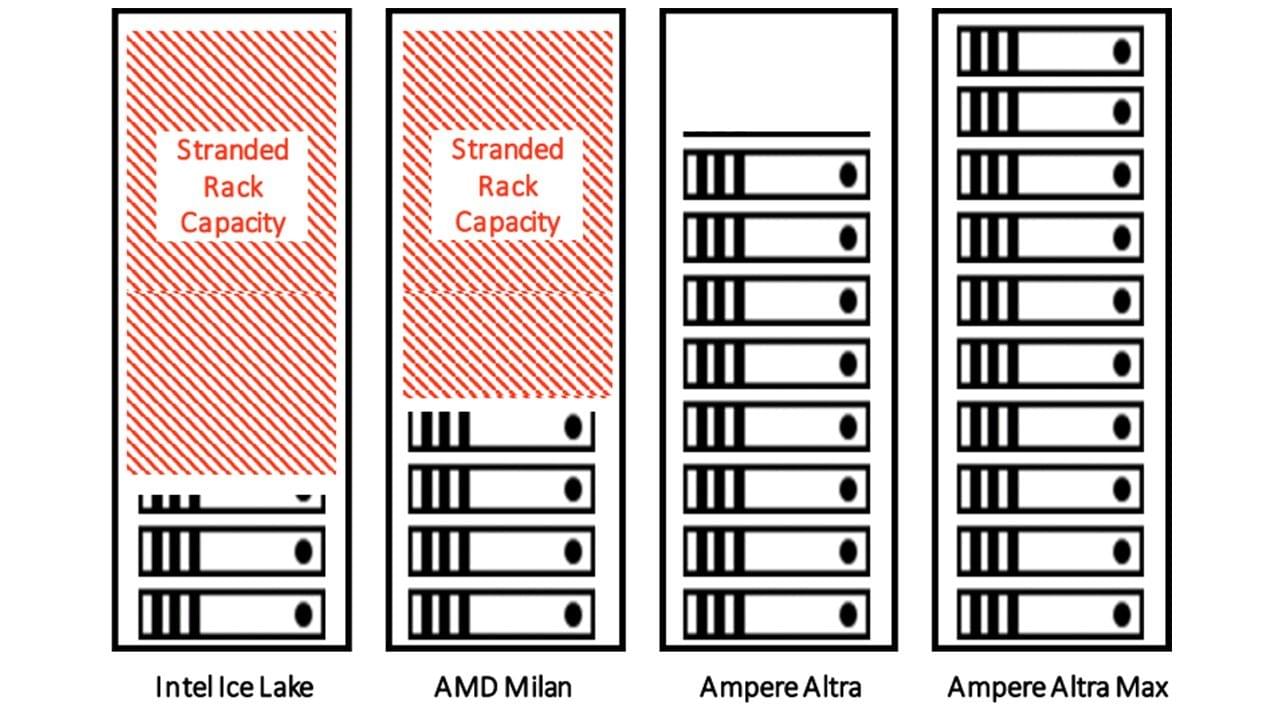

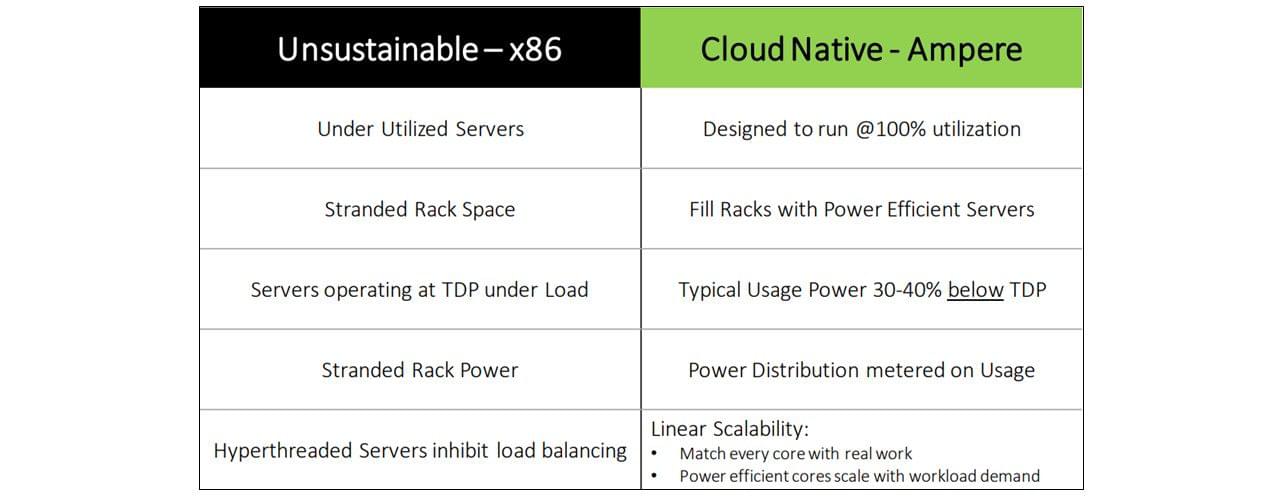

Because their architecture has evolved for different use cases over 40 years, Intel x86 cores consume more power than is required for most cloud microservice-based applications. In addition, the power budget for a rack and the heat dissipation from these cores are such that a CSP cannot fill a rack with x86 servers. Given the power and cooling constraints of x86 processors, CSPs may need to leave empty space in the rack, wasting valuable real estate. In fact, by 2025, a legacy approach (x86) to cloud is expected to double data center power needs and increase real estate needs by a factor of 1.6X.

Figure 7: Power and real estate required to continue anticipated data center growth. Image from Sustainability at the Core with Cloud Native Processors.

Considering cost and performance, it’s clear that cloud computing needs to shift away from general-purpose x86 compute to more power efficient and higher performance cloud native platforms. Specifically, we need greater core density in the data center with high performance cores that are more efficient, require less expensive cooling, and lower overall operating costs.

Because the Ampere cloud native platform is designed specifically for power efficiency, applications consume much less power without compromising performance or responsiveness. Figure 8 below shows the power consumption of workloads at scale running on both an x86-based and the Ampere cloud native platform. Depending upon the application, power efficiency — as measured by performance per Watt — is significantly higher with Ampere than with an x86 platform.

Figure 8: The Ampere cloud native platform delivers significantly higher power efficiency compared to x86 platforms across key cloud workloads. Image from Sustainability at the Core with Cloud Native Processors.

The low power architecture of cloud native platforms enables higher cores per rack density. For example, the high core count of Ampere® Altra® (80 cores) and Altra Max (128 cores) enables CSPs to achieve incredible core density. With Altra Max, a 1U chassis with two sockets can hold 256 cores (2 × 128) in a single rack unit (see Figure 9).

Using Cloud Native Processors, developers and architects need no longer choose between low power and great performance. The architecture of the Altra family of processors delivers greater compute capacity — up to 2.5x greater performance per rack — and up to a three-fold reduction in the number of racks required for the same compute performance of legacy x86 processors. The efficient architecture of Cloud Native Processors also delivers the best price per Watt in the industry.

Figure 9: The power inefficiency of x86 platforms leaves stranded rack capacity while the power efficiency of Ampere Altra Max utilizes all available real estate.
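The stranded-capacity effect in Figure 9 comes down to whether a rack runs out of space or power first. The sketch below assumes a 42U rack, a 14 kW rack power budget, and per-server power draws that are all hypothetical. Only the two-socket, 128-cores-per-socket, 1U configuration comes from the text.

```python
def servers_per_rack(rack_units, rack_power_budget_w, server_power_w, server_height_u=1):
    """Number of servers that fit, limited by whichever runs out first: space or power."""
    by_space = rack_units // server_height_u
    by_power = int(rack_power_budget_w // server_power_w)
    return min(by_space, by_power)


def cores_per_rack(servers, sockets_per_server, cores_per_socket):
    """Total cores in a rack of identical servers."""
    return servers * sockets_per_server * cores_per_socket


RACK_UNITS = 42        # hypothetical rack height
POWER_BUDGET_W = 14_000  # hypothetical rack power budget

# Hypothetical per-server power draws for a power-efficient and a power-hungry 1U server.
efficient = servers_per_rack(RACK_UNITS, POWER_BUDGET_W, server_power_w=300)
power_hungry = servers_per_rack(RACK_UNITS, POWER_BUDGET_W, server_power_w=700)

print(f"efficient servers: {efficient}, stranded units: {RACK_UNITS - efficient}")
print(f"power-hungry servers: {power_hungry}, stranded units: {RACK_UNITS - power_hungry}")
print(f"cores in a full rack of 2 x 128-core servers: {cores_per_rack(efficient, 2, 128)}")
```

When power is the binding limit, the remaining rack units stay empty. That empty space is the stranded capacity the figure describes.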

The benefits are impressive. Cloud native applications running in an Ampere-based cloud data center could decrease power requirements to an estimated 80% of current usage by 2025. At the same time, real estate requirements are estimated to drop by 70% (see Figure 7 above). The Ampere cloud native platform provides a 3x performance per Watt advantage, effectively tripling the capacity of data centers for the same power footprint.
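The relationship between performance per Watt and data center capacity is simple proportionality, sketched below. The power budget and the baseline efficiency unit are arbitrary assumptions. The 3x ratio is the figure quoted above.

```python
def capacity(power_budget_w, perf_per_watt):
    """Total work a facility can deliver within a fixed power budget."""
    return power_budget_w * perf_per_watt


def power_needed(work, perf_per_watt):
    """Power required to deliver a fixed amount of work."""
    return work / perf_per_watt


POWER_BUDGET_W = 1_000_000  # hypothetical facility budget
BASELINE_PPW = 1.0          # arbitrary baseline unit of work per Watt
IMPROVED_PPW = 3.0 * BASELINE_PPW

capacity_gain = capacity(POWER_BUDGET_W, IMPROVED_PPW) / capacity(POWER_BUDGET_W, BASELINE_PPW)
baseline_work = capacity(POWER_BUDGET_W, BASELINE_PPW)
power_fraction = power_needed(baseline_work, IMPROVED_PPW) / POWER_BUDGET_W

print(f"capacity at the same power: {capacity_gain:.1f}x")
print(f"power for the same work: {power_fraction:.0%} of baseline")
```

The same ratio can be read either way: more work within an unchanged power footprint, or the same work at a fraction of the power.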

Note that this cloud native approach doesn’t require advanced liquid cooling technology. While liquid cooling does make it possible to increase the density of x86 cores in a rack, it comes at a higher cost without introducing new value. Cloud native platforms push the need for such advanced cooling further into the future by enabling CSPs to do more with the existing real estate and power capacity they already have.

The power efficiency of a cloud native platform means a more sustainable cloud deployment (see Figure 10 below). It also allows companies to reduce their carbon footprint, a consideration that is becoming increasingly important to stakeholders such as investors and consumers. At the same time, CSPs will be able to support more compute to meet increasing demand within their existing real estate capacity and power limits. To provide additional competitive value, CSPs looking to expand their cloud native market will incorporate power expenses into compute resource pricing — resulting in a competitive advantage for cloud native platforms.

Figure 10: Why cloud native compute is fundamental to sustainability. Image from Sustainability at the Core with Cloud Native Processors.

Improved Responsiveness and Performance at Scale with Cloud Native

The cloud enables companies to step away from large monolithic applications to application components (microservices) that can scale by making more copies of components as needed. Because these cloud native applications are distributed in nature and designed for cloud deployment, they can scale out to hundreds of thousands of users seamlessly on a cloud native platform.

For example, if you deploy multiple MySQL containers, you want to ensure that every container has consistent performance. With Ampere, each application gets its own core. There is no need to verify isolation from another thread and no overhead for managing hyperthreading. Instead, each application provides consistent, predictable, and repeatable performance with seamless scaling.

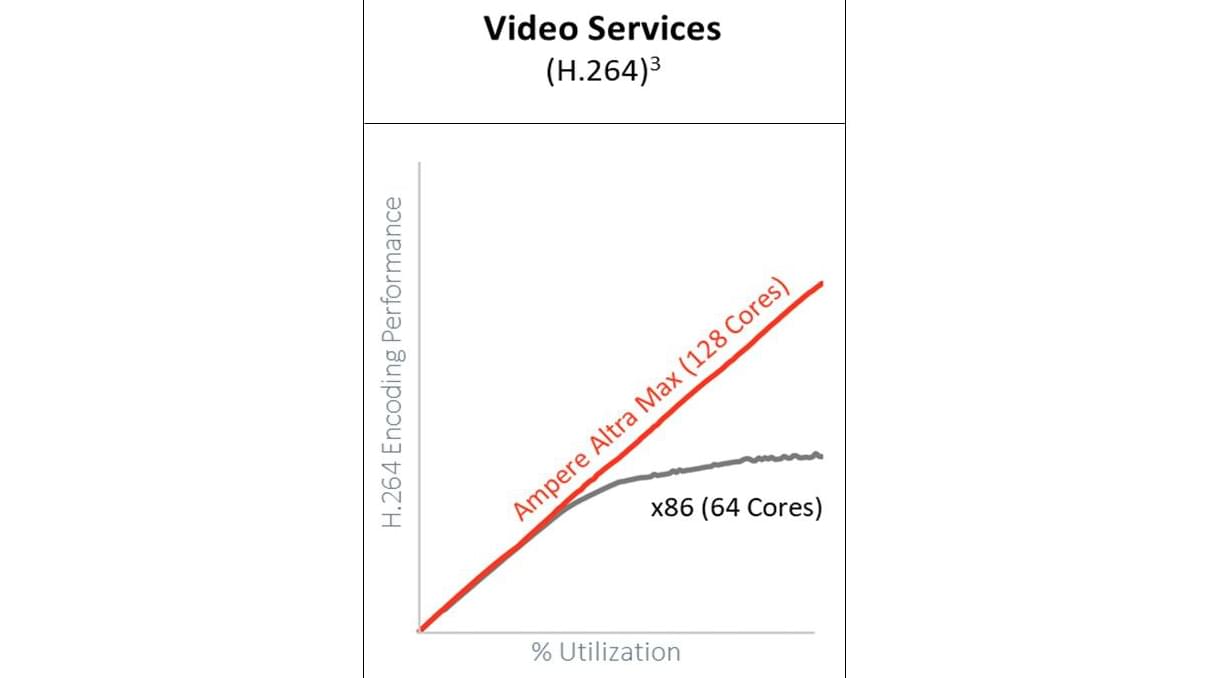

Another advantage of going cloud native is linear scalability. In short, each cloud native core increases performance in a linear manner, compared to x86 performance — which drops off as utilization increases. Figure 11 below illustrates this for H.264 encoding.

Figure 11: Ampere cloud native compute scales linearly, leaving no stranded capacity, unlike x86 compute. Image from Sustainability at the Core with Cloud Native Processors.
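One way to quantify how close a platform comes to linear scaling is to compare measured throughput against the ideal extrapolated from the smallest core count. The function below is a minimal sketch of that calculation, and both data sets are hypothetical numbers invented for the example, not results from Figure 11.

```python
def scaling_efficiency(throughput_by_cores):
    """Measured throughput divided by ideal linear throughput at each core count.

    The ideal is extrapolated from the smallest core count in the data.
    """
    base_cores = min(throughput_by_cores)
    per_core = throughput_by_cores[base_cores] / base_cores
    return {
        cores: throughput / (per_core * cores)
        for cores, throughput in sorted(throughput_by_cores.items())
    }


# Hypothetical throughput (for example, frames encoded per second) by core count.
linear_platform = {1: 10, 32: 320, 64: 640}
sublinear_platform = {1: 10, 32: 256, 64: 400}

print(scaling_efficiency(linear_platform))
print(scaling_efficiency(sublinear_platform))
```

An efficiency that stays at 1.0 means every added core contributes as much as the first. Values falling below 1.0 as cores are added indicate stranded capacity.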

The Cloud Native Advantage

It’s clear that current x86 technology will be unable to meet increasingly strict power constraints and regulations. Because of their efficient architecture, Ampere cloud native platforms provide up to 2x higher performance per core than x86 architectures. In addition, lower latency variance leads to greater consistency, more predictability, and better responsiveness, allowing you to meet SLAs without needing to significantly overprovision compute resources. The streamlined architecture of cloud native platforms also results in better power efficiency, leading to more sustainable operations and lower operating costs.

The proof of cloud native efficiency and scalability is best seen during high loads, such as serving 100,000 users. This is where the consistency of Ampere’s cloud native platform yields tremendous benefits for cloud native applications at scale, with up to a 4.28x price/performance advantage over x86 while still maintaining customer SLAs.

In Part 5 of this series, we’ll cover how you can engage with a partner to begin taking advantage of cloud native platforms immediately with minimal investment or risk.

Check out the Ampere Computing Developer Centre for more relevant content and latest news. You can also sign up for the Ampere Computing Developer Newsletter, or join the Ampere Computing Developer Community.

We created this article in partnership with Ampere Computing. Thank you for supporting the partners who make SitePoint possible.