How to Combine ‘Gut Feel’ & Science in Multivariate Testing

As site owners, designers, and developers we often underestimate the value of our intuition.

We prioritize data-driven decisions over instinct when it comes to virtually everything: from optimizing our monetization strategies to styling buttons on our websites. However, we often achieve better results if we learn to complement our gut feeling with tests and data rather than replace it.

The ability to combine intuition and data-driven thinking is crucial for multivariate testing (MVT). Although site testing is viewed as a “mechanical” process, we often come up with purely intuitive ideas when choosing the features and design elements for further tests.

A typical MVT procedure includes the core stages of the scientific method: question, research, hypothesis, experiment and troubleshooting, data analysis, and implementation. You can increase the value of your ideas by using these stages as a framework for your experiment.

Running Usability Tests to Ask the Right Questions

Start your MVT experiment with the right question. In most cases, asking yourself what exactly you can improve is a good starting point.

As a rule, our intuition makes us visualize dozens of useful tweaks, but we need to concentrate on the features that lead to substantial improvements. Running a usability test prior to an MVT experiment is a viable way of reinforcing intuition with concrete data. Depending on your budget, you have several options to choose from:

- Hiring experts to perform heuristic evaluation of your website;

- Joining a crowdsourcing testing platform like Peek, FiveSecondTest, or Concept Feedback to have your site reviewed by end-users;

- Getting creative with non-standard UX testing services such as “The User is Drunk” or “The User is My Mom” from Richard Littauer.

Let’s take a look at an example:

When launching native advertising on their websites, publishers often choose full-sized ad units that mimic the structure of their posts. This way the ad gets more prominence.

However, sometimes the ad feels too large, and this is more than just an aesthetic issue. Oversized adverts of this type can erode the user experience, leading to lower click-through rates (CTR).

Conducting a usability study will most probably confirm our guess — oversized in-feed ads tend to be the first UX-related problem users notice. An obvious solution for this problem would be to test smaller native ad units.

Using Competitor Analysis for Background Research

Conduct background research to ensure a meaningful outcome for your tests. Competitor analysis is a good fit for this stage: it often comes down to a brief review of competitor websites with top search rankings, which doesn't take much time.

Studying your competitors will help you better understand what UX solutions work for similar websites. Later on, you will determine if your own ideas work better than these solutions.

If this is the case, you will gain a competitive edge over similar online resources. If not, you will still get to understand what works best for similar websites.

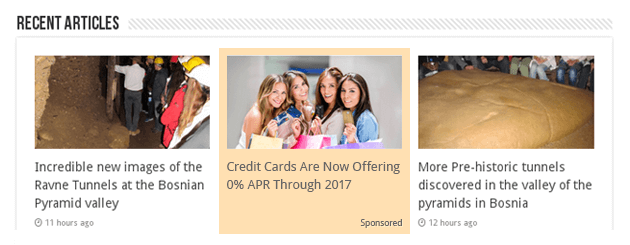

Competitor analysis will add clarity to the example above as well. It's easy to see that the most widespread alternative for an in-feed ad is a compact preview of the same branded post. These previews typically appear alongside recommended content, and are styled similarly, except for minor differences. This is what an average native ad of this type would look like in the “recent articles” section:

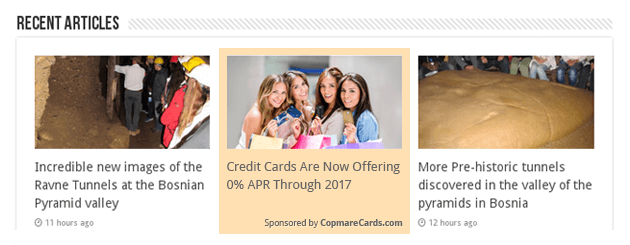

In a number of cases, publishers mention the advertiser explicitly.

Constructing a Valid Hypothesis

The hypothesis is the idea you will be testing. In fact, it is likely that your gut feeling will provide you with several worthy ideas.

In this case, you will need to find out which of them have the most practical advantages over your competitors’ features. To select the right items, give proper consideration to these three points:

- Try to predict how your audience will react to your solution. There is plenty of information on the psychology behind different color patterns, shapes, and layouts to help you make precise forecasts.

- Find out if your ideas correspond to the current trends and/or enable you to make use of new technologies.

- Make sure the options you test are suitable for your site in terms of design.

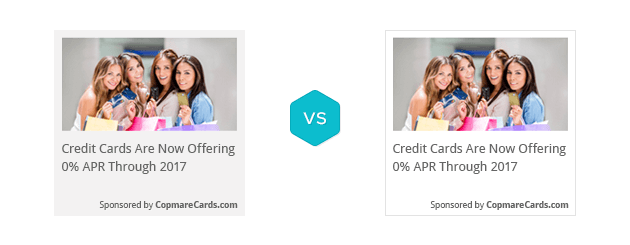

Let’s see how these points apply to the example above. A background color helps the ad stand out, but it may lead some users to mistake the branded post for a banner. To avoid confusion, it is worth either opting for a less contrasting background or removing the background completely.

To find out which option suits us better, we can apply the three criteria from above:

- Even though the background is less vivid, the ad still resembles a banner. This may confuse visitors, because banners and native ads create very different experiences.

- The practice of highlighting native ad backgrounds originates from the old design of paid search results. This design pattern is now considered obsolete by major publishers.

- Although both variants fit into the overall design of the website, the ad with no background will make the “recent articles” section visually cohesive.

Consequently, the “no background” version suits us better.

Streamlining the Test Procedure

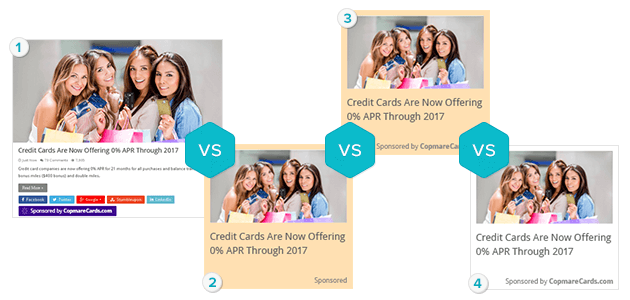

Include your current solution, the most widespread competitors’ solutions, and your own idea in the test. Most MVT experiments assume an equal distribution of traffic between several samples. This approach requires a high traffic volume, so you will need to keep the number of test samples to a reasonable minimum.

Another method the Epom Ad Server team uses is to exclude the winning combination as soon as it outstrips other options by a considerable degree (e.g. by 20%). After excluding the best combination we redistribute the remaining traffic between other samples. This allows us to get a more detailed comparison of separate features over the same period of time.
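The exclusion rule can be sketched in Python as follows. This is a minimal illustration, not the team's actual tooling: the function name `redistribute_traffic`, the `recipients` parameter, the sample names, and all CTR figures are hypothetical, and the sketch assumes that "outstrips by 20%" means a relative lift of 20% over the runner-up.

```python
def redistribute_traffic(ctr, shares, min_lift=0.20, recipients=None):
    """Exclude a clear leader and hand its traffic share to other samples.

    ctr        -- observed click-through rate per sample name
    shares     -- current fraction of traffic per sample name (sums to 1)
    min_lift   -- relative lead over the runner-up that counts as a clear win
                  (assumed reading of "by 20%")
    recipients -- samples that receive the freed traffic; defaults to every
                  sample that remains in the test
    Returns (excluded_sample_or_None, new_shares).
    """
    ranked = sorted(ctr, key=ctr.get, reverse=True)
    # With fewer than three samples nothing would be left to compare.
    if len(ranked) < 3:
        return None, dict(shares)

    leader, runner_up = ranked[0], ranked[1]
    clear_win = (
        ctr[leader] > ctr[runner_up]
        and ctr[leader] >= ctr[runner_up] * (1 + min_lift)
    )
    if not clear_win:
        return None, dict(shares)

    # Remove the leader and split its share equally between the recipients.
    new_shares = {name: share for name, share in shares.items() if name != leader}
    targets = list(new_shares) if recipients is None else list(recipients)
    bonus = shares[leader] / len(targets)
    for name in targets:
        new_shares[name] += bonus
    return leader, new_shares


# Hypothetical figures for four samples with an equal initial split.
observed_ctr = {"sample_1": 0.010, "sample_2": 0.014,
                "sample_3": 0.015, "sample_4": 0.020}
equal_split = {name: 0.25 for name in observed_ctr}

excluded, updated = redistribute_traffic(observed_ctr, equal_split)
```

Passing `recipients=["sample_2", "sample_3"]` would send the freed traffic only to those two samples instead of spreading it across everything that remains.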

If sample 4 becomes the obvious winner before the planned end of the test, we can redistribute its traffic between samples 2 and 3. This allows us to see whether mentioning the advertiser actually makes any difference.

While there are other ways to distribute traffic, the two methods outlined above use direct calculation instead of relying on approximate figures. To further increase precision and eliminate the most common causes of troubleshooting, optimize your site analytics beforehand, preview all samples, and decide on the optimal duration of your experiment.
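One way to decide on the duration is to estimate how many visitors each sample needs before a given CTR difference becomes detectable, then divide by your daily traffic. The sketch below uses the standard normal-approximation formula for comparing two proportions. It is a rough planning aid under stated assumptions, not a prescribed method: the function names, the 5% significance level, the 80% power, and the traffic figures are all hypothetical, and no correction for comparing more than two samples is applied.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_sample(baseline_ctr, expected_ctr, alpha=0.05, power=0.80):
    """Approximate visitors needed per sample to detect the CTR change.

    Uses the two-proportion normal approximation with a two-sided test.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (baseline_ctr * (1 - baseline_ctr)
                + expected_ctr * (1 - expected_ctr))
    effect = expected_ctr - baseline_ctr
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def estimated_duration_days(per_sample, n_samples, daily_visitors):
    """Days needed when traffic is split equally between all samples."""
    return ceil(per_sample * n_samples / daily_visitors)


# Hypothetical scenario: CTR expected to move from 2.0% to 2.5%,
# four samples, 5,000 visitors per day reaching the tested section.
needed = visitors_per_sample(baseline_ctr=0.020, expected_ctr=0.025)
days = estimated_duration_days(needed, n_samples=4, daily_visitors=5000)
print(f"{needed} visitors per sample, about {days} days")
```

Because the required sample grows with the inverse square of the expected difference, halving the difference you want to detect roughly quadruples the traffic you need, which is why the number of samples should stay small.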

Analyzing and Interpreting the Data

Multivariate tests are quite easy to analyze and interpret: the top-performing sample is the winner. However, in some cases our intuition tells us something is wrong with the results. More often than not, there is much more to such doubts than gut feeling.

There is an article on SitePoint which argues that most winning results of A/B tests are misleading. It is safe to assume that MVT has similar problems. The key reasons behind misread A/B and multivariate tests include insufficient experiment duration and traffic volume, as well as a failure to understand the audience. Additionally, you need to consider a couple of factors to ensure a valid interpretation of your data:

- Double-check that you have defined the right key performance indicators (KPIs) for your testing campaign. For instance, CTR is a suitable metric for the native ad from the above examples, as we are interested in the number of users willing to read the full branded post.

- Make sure that the difference between the test results is big enough for you to draw reliable conclusions. In the example, this would also apply to samples 2 and 3, because we need to make sure that mentioning the advertiser is a sound decision.
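To judge whether a gap between two samples is "big enough", one common option is a two-proportion z-test on their click and view counts. The sketch below is an illustration only: the function name `ctr_difference_p_value`, the click and view counts, and the 0.05 threshold are assumptions, and the test relies on a normal approximation that works best with large samples.

```python
from math import sqrt
from statistics import NormalDist


def ctr_difference_p_value(clicks_a, views_a, clicks_b, views_b):
    """Two-sided p-value for the difference between two CTRs.

    Uses a pooled two-proportion z-test (normal approximation).
    """
    ctr_a = clicks_a / views_a
    ctr_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_error == 0:
        # No clicks at all (or only clicks): nothing to distinguish.
        return 1.0
    z = (ctr_a - ctr_b) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical counts: sample 2 at 2.0% CTR, sample 3 at 2.2% CTR.
p_value = ctr_difference_p_value(300, 15000, 330, 15000)
is_reliable = p_value < 0.05  # assumed significance threshold
print(f"p-value: {p_value:.3f}, reliable difference: {is_reliable}")
```

With these made-up counts the difference does not clear the threshold, so a 0.2 percentage point gap on 15,000 views per sample would not justify a conclusion about mentioning the advertiser.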

The Bottom Line

Intuition is vital to many aspects of website development and digital marketing, including such “mechanical” procedures as multivariate testing. The ability to put your intuition into action will help you create unique, valuable online experiences for your audience. The ideas we come up with often have great potential, and multivariate testing is a great tool for validating them.

Follow the stages of the scientific method and conduct additional research to ensure your tests return meaningful data. Usability testing and competitor analysis help you further increase the precision of an MVT procedure, which makes them invaluable for a well-planned experiment.