Using Android Text-to-Speech to Create a Smart Assistant

In this tutorial I will show you how to create a simple Android app that listens to the user's speech and converts it to text. The app then analyzes the text and uses it as a command to store data or answer the user.

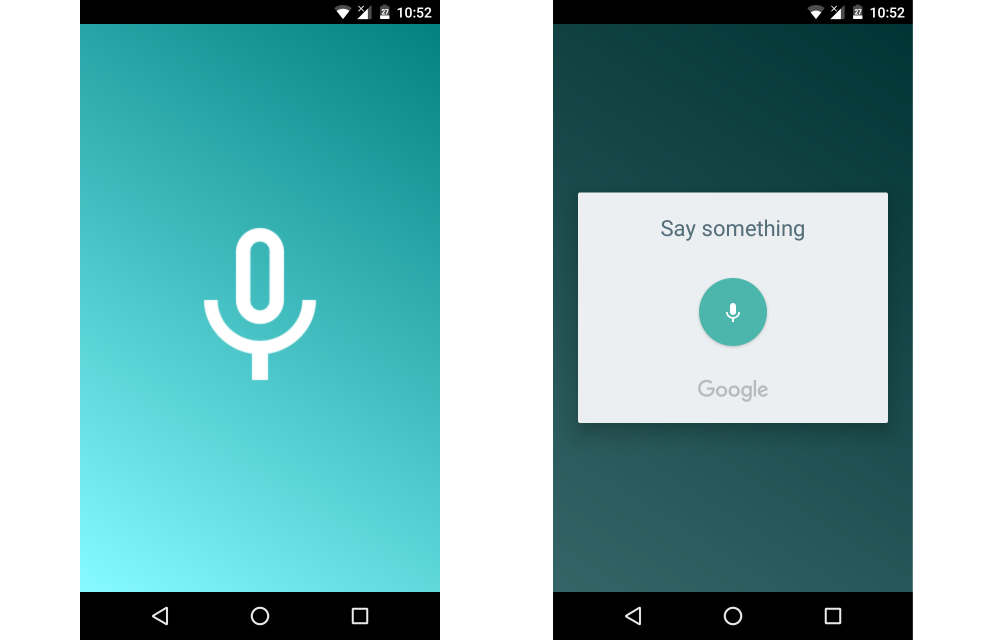

The application's user interface is simple: a single ImageButton in the center of a full-screen gradient background. Each time the user wants to speak, they press the button and talk.

You can find the final project on GitHub.

Create Application

Create a new project in Android Studio, choosing a minimum API level of 18 and adding an Empty Activity. This will be the only activity in the project.

To hide the ActionBar so the layout fills the window, open AndroidManifest.xml and set android:theme="@style/Theme.AppCompat.NoActionBar". This removes the ActionBar from the activity.

You now have a full-screen layout with a TextView inside. To improve it, add a gradient background to the RelativeLayout.

Right-click the drawable folder and select New -> Drawable resource file. Name it ‘background’ and replace its code with this:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<shape xmlns:android="http://schemas.android.com/apk/res/android"
    android:shape="rectangle">
    <gradient
        android:type="linear"
        android:startColor="#FF85FBFF"
        android:endColor="#FF008080"
        android:angle="45"/>
</shape>
```

Feel free to change the colors and angle to suit your own design.

The ImageButton inside the layout uses the microphone icon (ic_mic_none_white_48dp) from Material Design Icons. Download it, add it to the project's drawable resources, and set it as the button's src.

Update the code inside activity_main.xml to:

```xml
<?xml version="1.0" encoding="utf-8"?>
<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:background="@drawable/background"
    android:id="@+id/rel"
    tools:context="com.example.theodhor.speechapplication.MainActivity">

    <ImageButton
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:id="@+id/microphoneButton"
        android:layout_centerVertical="true"
        android:layout_centerHorizontal="true"
        android:src="@drawable/ic_mic_none_white_48dp"
        android:background="@null"/>

</RelativeLayout>
```

Speaking

Now that the user interface is complete, the next step is the Java code inside MainActivity.

Declare a TextToSpeech variable above the onCreate method:

```java
private TextToSpeech tts;
```

Inside onCreate add:

```java
tts = new TextToSpeech(this, new TextToSpeech.OnInitListener() {
    @Override
    public void onInit(int status) {
        if (status == TextToSpeech.SUCCESS) {
            int result = tts.setLanguage(Locale.US);
            if (result == TextToSpeech.LANG_MISSING_DATA || result == TextToSpeech.LANG_NOT_SUPPORTED) {
                Log.e("TTS", "This language is not supported");
            } else {
                // Only speak once the engine is ready and the language is available
                speak("Hello");
            }
        } else {
            Log.e("TTS", "Initialization failed!");
        }
    }
});
```

This initializes the TextToSpeech engine and, once it is ready, sets the language to US English and speaks a greeting. speak() is a helper method that takes a String parameter: the text you want Android to speak.

Create the method and add this code:

```java
private void speak(String text) {
    if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {
        tts.speak(text, TextToSpeech.QUEUE_FLUSH, null, null);
    } else {
        tts.speak(text, TextToSpeech.QUEUE_FLUSH, null);
    }
}
```

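The method checks Build.VERSION because the three-parameter tts.speak(String, int, HashMap) was deprecated in API level 21 (Android 5.0 Lollipop), and its four-parameter replacement, speak(CharSequence, int, Bundle, String), only exists from API level 21 onward. Since the app's minimum API level is 18, older devices still need the deprecated version.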

After speak(), override onDestroy() to stop the TextToSpeech engine and release its resources when the activity is destroyed, for example when the user closes the app:

```java
@Override
public void onDestroy() {
    if (tts != null) {
        tts.stop();
        tts.shutdown();
    }
    super.onDestroy();
}
```

At this stage, the application speaks “Hello” once started. The next step is to make it listen.

Listening

To make the application listen, you will use the microphone button. Add this code to onCreate:

```java
findViewById(R.id.microphoneButton).setOnClickListener(new View.OnClickListener() {
    @Override
    public void onClick(View v) {
        listen();
    }
});
```

Clicking the ImageButton calls the listen() method:

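```java
private void listen() {
    Intent i = new Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH);
    i.putExtra(RecognizerIntent.EXTRA_LANGUAGE_MODEL, RecognizerIntent.LANGUAGE_MODEL_FREE_FORM);
    // EXTRA_LANGUAGE expects a language tag String such as "en-US", not a Locale object
    Locale locale = Locale.getDefault();
    String languageTag = locale.getCountry().isEmpty()
            ? locale.getLanguage()
            : locale.getLanguage() + "-" + locale.getCountry();
    i.putExtra(RecognizerIntent.EXTRA_LANGUAGE, languageTag);
    i.putExtra(RecognizerIntent.EXTRA_PROMPT, "Say something");
    try {
        startActivityForResult(i, 100);
    } catch (ActivityNotFoundException a) {
        Toast.makeText(MainActivity.this, "Your device doesn't support Speech Recognition", Toast.LENGTH_SHORT).show();
    }
}
```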

This method starts the speech recognition activity, which is displayed as a dialog with a text prompt. The recognition language comes from the device's default locale, obtained with Locale.getDefault().

startActivityForResult(i, 100) launches the recognizer activity without blocking; when that activity finishes, Android delivers its result to the current activity's onActivityResult() method. 100 is an arbitrary request code, and any non-negative number that suits your use case will do. The result comes back tagged with the same code, which lets you tell the results of different requests apart.
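To make the role of the request code concrete, here is a plain-Java sketch (it runs on a desktop JVM, not inside Android) of the kind of dispatch onActivityResult() performs when an activity starts more than one request. RequestRouter, route() and the second request code 101 are hypothetical; RESULT_OK is set to -1 to match the value of Activity.RESULT_OK.

```java
import java.util.List;

// Plain-Java sketch of request-code dispatch; all names here are hypothetical.
final class RequestRouter {
    static final int SPEECH_REQUEST_CODE = 100; // the code passed to startActivityForResult(i, 100)
    static final int OTHER_REQUEST_CODE = 101;  // a hypothetical second request
    static final int RESULT_OK = -1;            // same value as Activity.RESULT_OK

    // Decides what to do with a result based on the request code it came back with.
    static String route(int requestCode, int resultCode, List<String> results) {
        if (resultCode != RESULT_OK || results == null) {
            return "ignored";
        }
        switch (requestCode) {
            case SPEECH_REQUEST_CODE:
                return "speech: " + results;
            case OTHER_REQUEST_CODE:
                return "other: " + results;
            default:
                return "unknown request " + requestCode;
        }
    }
}
```

With a single request, as in this app, a plain if (requestCode == 100) check is enough; a switch becomes handy once you add more requests.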

To handle the result from the started activity, add this overridden method:

```java
@Override
protected void onActivityResult(int requestCode, int resultCode, Intent data) {
    super.onActivityResult(requestCode, resultCode, data);
    if (requestCode == 100) {
        if (resultCode == RESULT_OK && null != data) {
            ArrayList<String> res = data.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS);
            // Guard against a missing or empty result list before reading the first entry
            if (res != null && !res.isEmpty()) {
                String inSpeech = res.get(0);
                recognition(inSpeech);
            }
        }
    }
}
```

onActivityResult() receives the results of every activity started with startActivityForResult(), so it checks requestCode to pick out the speech recognizer's result. When requestCode is 100, resultCode is RESULT_OK, and data is not null, the recognized text is in the EXTRA_RESULTS list, and the app takes the first entry, res.get(0), as what the user said.
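EXTRA_RESULTS can contain several alternative transcriptions (RecognizerIntent.EXTRA_MAX_RESULTS limits how many), and the code above acts only on the first. If you want the other alternatives to get a chance to match a command, one possible approach is sketched below in plain Java. TranscriptPicker, pickTranscript() and the list of known phrases are hypothetical; the sketch falls back to the first alternative when nothing matches and returns null for a null or empty list.

```java
import java.util.List;
import java.util.Locale;

// Sketch: choose which recognizer alternative to act on. Names are hypothetical.
final class TranscriptPicker {
    // Returns the first alternative containing a known phrase (case-insensitive),
    // otherwise the first alternative, or null if there are no alternatives.
    static String pickTranscript(List<String> alternatives, List<String> knownPhrases) {
        if (alternatives == null || alternatives.isEmpty()) {
            return null;
        }
        for (String candidate : alternatives) {
            String lower = candidate.toLowerCase(Locale.ROOT);
            for (String phrase : knownPhrases) {
                if (lower.contains(phrase.toLowerCase(Locale.ROOT))) {
                    return candidate;
                }
            }
        }
        return alternatives.get(0);
    }
}
```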

Create a new method called recognition which will take a String as a parameter:

```java
private void recognition(String text) {
    Log.e("Speech", "" + text);
}
```

At this stage, the application listens after the user clicks the microphone button and converts the user's speech to text. The result is logged with Log.e(), so it appears at error level in Logcat.

Learning

To make the app more interesting, in this step you will make it able to learn simple things, like your name. For the app to remember what it learns, it needs local storage; this tutorial uses SharedPreferences.

Add these lines above the onCreate method:

```java
private SharedPreferences preferences;
private SharedPreferences.Editor editor;
private static final String PREFS = "prefs";
private static final String NAME = "name";
private static final String AGE = "age";
private static final String AS_NAME = "as_name";
```

Then, inside onCreate add:

```java
// MODE_PRIVATE (value 0) keeps the preferences private to this app
preferences = getSharedPreferences(PREFS, MODE_PRIVATE);
editor = preferences.edit();
```
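SharedPreferences only exists on Android, which makes the learning logic awkward to try out on a desktop JVM. One approach, sketched below under the assumption that you only need putString() and getString(), is a tiny map-backed stand-in whose method names mirror the SharedPreferences calls used in this tutorial. InMemoryPrefs is a hypothetical class, not part of Android, and unlike SharedPreferences it does not persist anything.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical map-backed stand-in mirroring the SharedPreferences calls used here.
// Unlike SharedPreferences, nothing is written to disk.
final class InMemoryPrefs {
    private final Map<String, String> values = new HashMap<>();

    // Mirrors preferences.getString(key, defValue): returns defValue when the key is missing.
    String getString(String key, String defValue) {
        String value = values.get(key);
        return value != null ? value : defValue;
    }

    // Mirrors editor.putString(key, value); returns this so calls can be chained.
    InMemoryPrefs putString(String key, String value) {
        values.put(key, value);
        return this;
    }

    // Mirrors editor.apply(); a no-op here because the map is updated immediately.
    void apply() {
    }
}
```

Note the getString(key, defValue) pattern: the tutorial passes null as the default, so asking for a value that was never stored yields null.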

First, make the app ask a question: change speak("Hello") to speak("What is your name?").

You can use simple logic here: when the app asks “What is your name?”, the user answers with something like “My name is Dori”, and the app takes the name from that answer. A simple way is to split the answer's string by spaces (" ") and take the last word.

Update the code in the recognition method:

```java
private void recognition(String text) {
    Log.e("Speech", "" + text);
    // Create an array containing the words of the answer
    String[] speech = text.split(" ");
    // The last word is the name
    String name = speech[speech.length - 1];
    // Store the name in local storage and save the change
    editor.putString(NAME, name).apply();
    // Make the app say the name
    speak("Your name is " + preferences.getString(NAME, null));
}
```
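The split-and-take-the-last-word heuristic can be tried on its own in plain Java. This sketch goes slightly beyond the tutorial's version, under two assumptions: it splits on any run of whitespace with split("\\s+") instead of a single space, and it strips trailing punctuation, since a transcript such as “My name is Dori.” would otherwise store “Dori.”. NameExtractor and lastWord() are hypothetical names.

```java
// Sketch of the "last word is the name" heuristic; names are hypothetical.
final class NameExtractor {
    // Returns the last word of the answer without trailing punctuation,
    // or null when the answer contains no words.
    static String lastWord(String answer) {
        String trimmed = answer.trim();
        if (trimmed.isEmpty()) {
            return null;
        }
        // Split on one or more whitespace characters
        String[] words = trimmed.split("\\s+");
        String last = words[words.length - 1];
        // Remove trailing punctuation such as "." or "!"
        return last.replaceAll("\\p{Punct}+$", "");
    }
}
```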

The recognition method receives everything the user says, not only answers to the name question. Because the user can say different things, you can tell the commands apart by checking for words or phrases the text contains.

For example, the code inside this method could be:

```java
private void recognition(String text) {
    Log.e("Speech", "" + text);
    String[] speech = text.split(" ");
    // If the speech contains these words, the user is saying their name.
    // Compare in lower case because the recognizer may capitalize "My".
    if (text.toLowerCase(Locale.ROOT).contains("my name is")) {
        String name = speech[speech.length - 1];
        Log.e("Your name", "" + name);
        editor.putString(NAME, name).apply();
        speak("Your name is " + preferences.getString(NAME, null));
    }
}
```
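The keyword checks can also be collected into one small classifier, sketched here in plain Java with the phrases this tutorial uses for names, ages and the time. CommandClassifier and its Command enum are hypothetical. The text is lower-cased with Locale.ROOT before matching, on the assumption that the recognizer may capitalize words (for example “My name is Dori”); Locale.ROOT avoids locale-specific surprises such as the Turkish dotless i when lower-casing “I”.

```java
import java.util.Locale;

// Sketch of keyword-based command matching; Command and classify() are hypothetical.
final class CommandClassifier {
    enum Command { SET_NAME, SET_AGE, ASK_AGE, ASK_TIME, UNKNOWN }

    static Command classify(String text) {
        // Compare in lower case so "My name is" and "my name is" both match
        String lower = text.toLowerCase(Locale.ROOT);
        if (lower.contains("my name is")) {
            return Command.SET_NAME;
        }
        // Check the question before the statement, since both mention "old"
        if (lower.contains("how old am i")) {
            return Command.ASK_AGE;
        }
        if (lower.contains("years") && lower.contains("old")) {
            return Command.SET_AGE;
        }
        if (lower.contains("what time is it")) {
            return Command.ASK_TIME;
        }
        return Command.UNKNOWN;
    }
}
```

In recognition(), you could then switch on classify(text) and keep the storing and speaking code for each command in its own branch.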

But this is still a simple interaction with the app. You could make it learn your age, or even give it a name.

Inside the same method, try these simple conditions:

```java
// This must be the age
// Just say: I am x years old.
if (text.contains("years") && text.contains("old") && speech.length >= 3) {
    String age = speech[speech.length - 3];
    Log.e("Age", "" + age);
    editor.putString(AGE, age).apply();
}
// Then ask it for your age
if (text.toLowerCase(Locale.ROOT).contains("how old am i")) {
    speak("You are " + preferences.getString(AGE, null) + " years old.");
}
```
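Taking speech[speech.length - 3] only works when the sentence ends exactly with “x years old”. A slightly more tolerant sketch, under the assumption that the recognizer writes the age as digits (such as “25” rather than “twenty five”), looks for the word just before “years” and checks that it is a number. AgeParser and parseAge() are hypothetical names.

```java
import java.util.Locale;

// Sketch: find the number right before "years"; names are hypothetical.
final class AgeParser {
    // Returns the age, or -1 if no one-to-three-digit word precedes "years".
    static int parseAge(String text) {
        String[] words = text.toLowerCase(Locale.ROOT).trim().split("\\s+");
        for (int i = 1; i < words.length; i++) {
            // startsWith also accepts "years." or "years,"
            if (words[i].startsWith("years")) {
                String candidate = words[i - 1];
                // Limit to three digits so Integer.parseInt cannot overflow
                if (candidate.matches("\\d{1,3}")) {
                    return Integer.parseInt(candidate);
                }
            }
        }
        return -1;
    }
}
```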

The app can tell you the time:

```java
// Ask: What time is it?
if (text.toLowerCase(Locale.ROOT).contains("what time is it")) {
    SimpleDateFormat sdfDate = new SimpleDateFormat("HH:mm", Locale.US);
    Date now = new Date();
    String[] strDate = sdfDate.format(now).split(":");
    // On the hour, say "o'clock" instead of "00"
    if (strDate[1].equals("00")) {
        speak("The time is " + strDate[0] + " o'clock");
    } else {
        speak("The time is " + sdfDate.format(now));
    }
}
```
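The o'clock rule is easier to test when it is separated from the clock. This plain-Java sketch takes the hour and minute as parameters, so the sentence can be checked without waiting for the top of the hour. TimePhrase and timePhrase() are hypothetical names; like the HH:mm format above, it writes the hour with two digits.

```java
import java.util.Locale;

// Sketch: build the sentence the app speaks for a given 24-hour time.
final class TimePhrase {
    static String timePhrase(int hour, int minute) {
        if (minute == 0) {
            // On the hour, say "o'clock" instead of "00"
            return String.format(Locale.US, "The time is %02d o'clock", hour);
        }
        return String.format(Locale.US, "The time is %02d:%02d", hour, minute);
    }
}
```

In the app you could call it with the current time, for example timePhrase(c.get(Calendar.HOUR_OF_DAY), c.get(Calendar.MINUTE)) where c is Calendar.getInstance().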

Smart Speak

In the GitHub project I have included more examples for you to experiment with and build your own Android assistant.

I hope you enjoyed this tutorial and have a useful conversation with your phone. If you have any questions or comments, please let me know below.