An Introduction to A/B Testing

We’ve recently published a number of articles about data-driven design, or using analytics to inform our UI designs. The short of it is that we can use analytics tools like Google Analytics to conduct user research, to learn about our user demographics and user behavior, and to identify areas of our websites that might be falling short in terms of UX.

When we know where users are having trouble, and we have a little background information as to who is having trouble, we can begin to hypothesize why. We can then employ usability testing to confirm (or disprove!) our hypotheses, and through this we can potentially even see an obvious solution. The result? Happier users, more conversions!

But what happens when the solution doesn’t become obvious through usability testing? What happens if there’s a solution for one user group, but it doesn’t quite work for another user group? You could be fixing the user experience for some users, while breaking it for another demographic.

Let me introduce you to A/B testing.

What is A/B Testing?

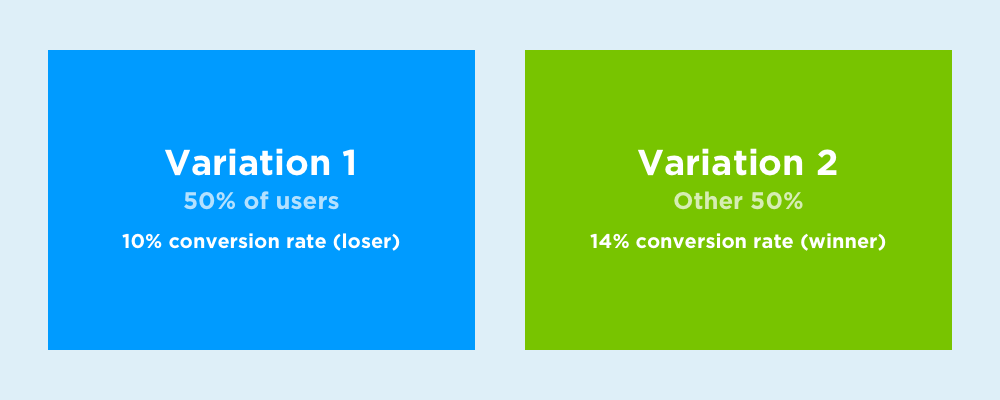

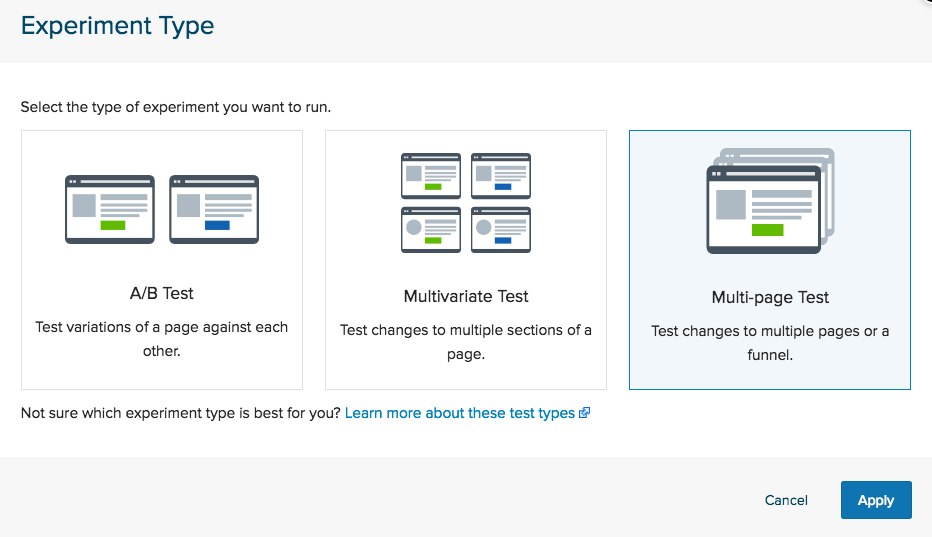

A/B testing, in short, is about implementing two variations of a design to see which one performs better, where the primary metric is usually the number of conversions (sales, signups, subscribers, or whatever matters to your website). There’s a methodical technique to A/B testing, and there are also tools to help us carry out these experiments in fair and unobtrusive ways.
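To make "which one performs better" a little more concrete, here is a minimal sketch that compares the conversion rates of two variations. The visitor and conversion counts are made up, and the two-proportion z-test is our own choice of sanity check for whether the gap could just be noise. It isn't necessarily what any particular A/B testing tool does.

```python
from math import erf, sqrt


def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted (sale, signup, subscription...)."""
    return conversions / visitors


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between B and A."""
    p_a = conversion_rate(conv_a, n_a)
    p_b = conversion_rate(conv_b, n_b)
    # Pooled rate: the conversion rate if A and B were really the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal CDF.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Hypothetical results: 2,400 visitors saw each variation.
rate_a = conversion_rate(120, 2400)
rate_b = conversion_rate(156, 2400)
z, p_value = two_proportion_z_test(120, 2400, 156, 2400)

print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p_value:.3f}")
```

A small p-value suggests the difference is unlikely to be down to chance alone. How small is small enough is a judgment call for your team.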

We can also use multivariate testing, a close relative of A/B testing, to carry out experiments when there are multiple variables to consider.

What is Multivariate Testing?

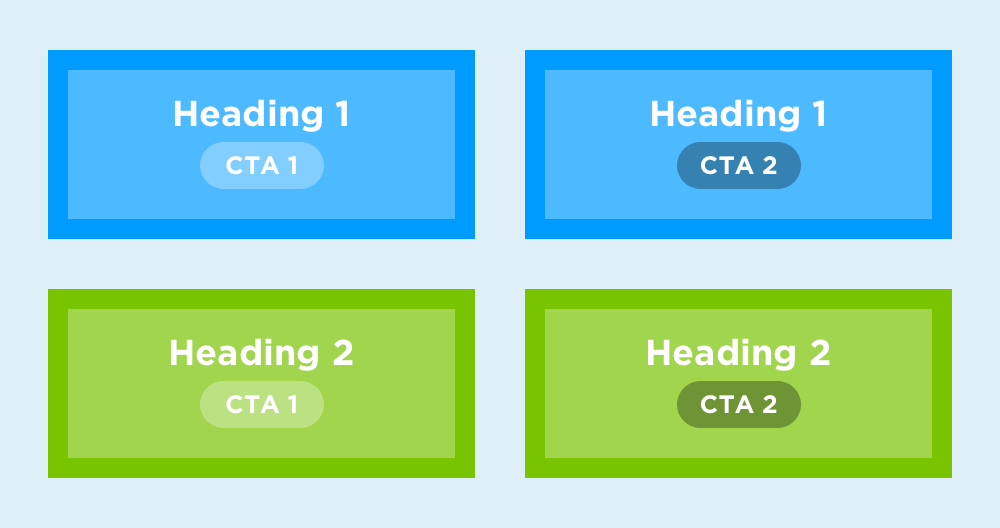

Multivariate testing is more useful for finding out what content users enjoy more, rather than which version of an interface they prefer, but that being said, content is an important aspect of UX too. Just think about it: users don’t come to your website to appreciate how intuitive your navigation is. UX is what the users need; content is what the users want (and what they came for).

Consider a heading + CTA that’s the main conversion funnel on a home page. Let’s say we have various headings and CTA options, and we want to test them all in a variety of different combinations at random. That’s where multivariate testing might be used in preference to the standard A/B testing.
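As a rough illustration of why the number of variations grows quickly, the sketch below builds every heading and CTA pairing. The copy is hypothetical, and `build_variants` is just a helper name we made up for this example.

```python
from itertools import product

# Hypothetical copy options for the home page.
headings = ["Design faster", "Ship better UX", "Test every idea"]
ctas = ["Start free trial", "Get started"]


def build_variants(headings, ctas):
    """Pair every heading with every CTA, giving each combination an id."""
    return [
        {"id": index, "heading": heading, "cta": cta}
        for index, (heading, cta) in enumerate(product(headings, ctas))
    ]


variants = build_variants(headings, ctas)

for variant in variants:
    print(variant["id"], "|", variant["heading"], "|", variant["cta"])
```

Three headings and two CTAs already produce six combinations, and each one needs enough traffic to be judged fairly. That's worth keeping in mind before adding more variables.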

What Tools Are Needed for A/B Testing?

Essentially, you can conduct A/B testing by recording the results of one version, implementing another version, and then recording the results of that version as well. However, there are some critical flaws to address:

- Your traffic/audience could change in between the two tests

- You’ll have to analyze the data twice, and compare results manually

- You might want to segment tests to specific user groups, which is hard to do by hand

Ideally, we want the results of both tests to display side by side, so that we can analyze them comparatively, and we also want both experiments to be active at the same time, to ensure that the experiment is conducted fairly.

A/B testing tools allow us to do exactly this.
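To show what "both experiments active at the same time" can look like in practice, here is a minimal sketch of one common way to split traffic: hashing a user ID so that each visitor consistently lands in the same variation. This is an assumption for illustration only, not a description of how any of the tools below work, and `assign_variant` and the experiment name are hypothetical.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one variant of an experiment."""
    # Including the experiment name means the same user can fall into
    # different buckets in different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return variants[int(digest, 16) % len(variants)]


# Simulate 10,000 hypothetical visitors arriving during the same period.
split = Counter(
    assign_variant(f"user-{number}", "homepage-cta") for number in range(10_000)
)
print(split)
```

Because the assignment depends only on the user ID and the experiment name, a returning visitor always sees the same version, and both versions collect data over the same period.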

Big 3: Google Optimize, CrazyEgg and Optimizely

While there are a few notable A/B testing tools, Google Optimize, CrazyEgg and Optimizely are often considered the big three. They’re also very different in a lot of ways, so the one you choose may depend on your A/B testing needs.

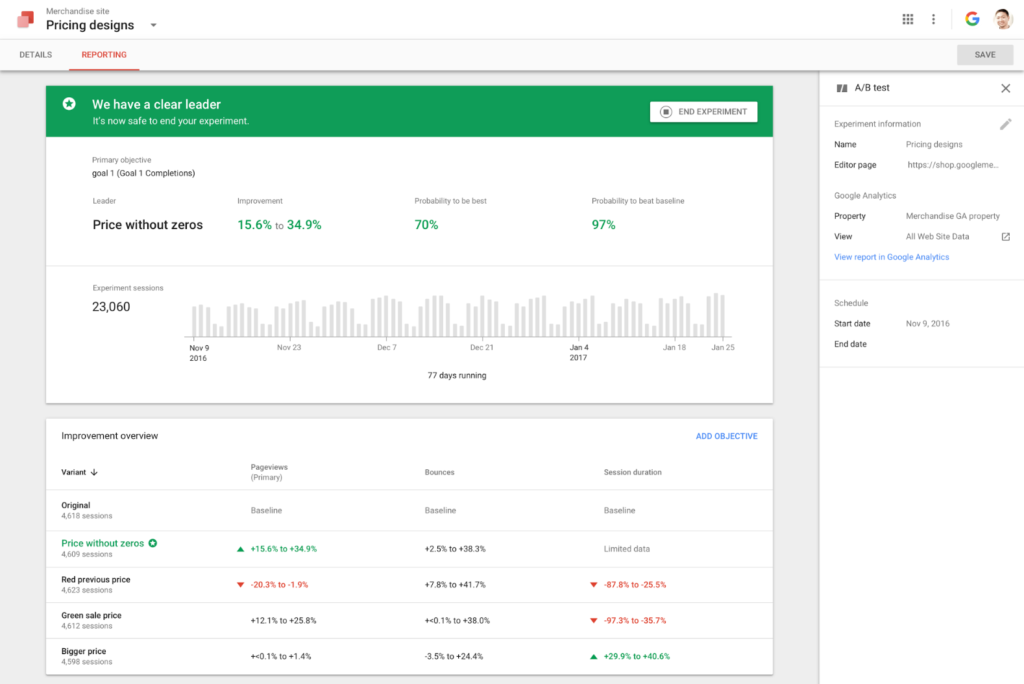

Google Optimize works in harmony with Google Analytics, and like Google Analytics, it’s free (although there’s a “Google Optimize 360” version which is aimed at enterprise teams). Much like Google Analytics, the free version is rather sophisticated and will be more than sufficient for most users.

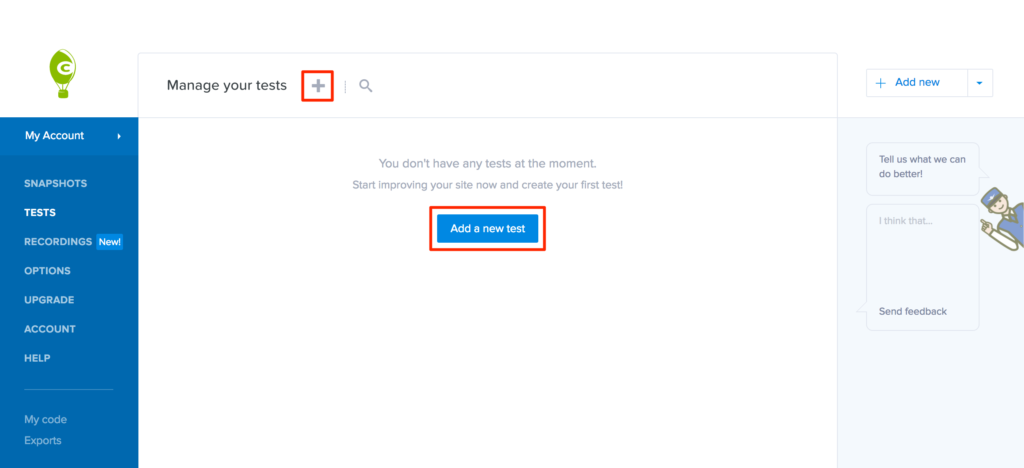

CrazyEgg actually rolls user testing (it has heatmap tools) and A/B testing into a single app, allowing you to narrow down specific usability issues and then experiment with various solutions, without needing to switch apps.

Optimizely is the most complex of the three, but also the most powerful, since its SDKs allow for a more native integration across your websites, mobile apps and even TV apps (although these tools do require a developer). That aside, Optimizely also uses A/B testing to deliver content-relevant recommendations, which tend to convert better because they’re targeted at audiences that are likely to enjoy them.

A/B Testing, the Right Way

When it comes to A/B testing, there’s a right way and a wrong way to go about it. Where we’re most likely to drop the ball is in thinking that, if a website is converting poorly, we must design a whole new version of it and see if it works better. Instead, we should be using analytics (and later usability testing) to identify more specific areas of improvement, and then test those. Otherwise, we may end up removing aspects of the design that were in fact working, and we’ll also fail to learn what our audience really likes and dislikes about the experience.

Conclusion

Now that you know what A/B and multivariate testing are, the tools that can be used to carry out A/B testing, and how to conduct A/B experiments in a structured and progressive way, you can begin to take a more data-driven approach to web design (or any design, really).

If you’d like to learn more about analytics, I’d suggest checking out the other articles in our analytics series. Identifying causes for concern beforehand will help you figure out where you need to focus your usability and A/B testing efforts.