What the Meta 2 Means for Augmented Reality Developers

Augmented reality is going to be one of the next big waves in computing. When it hits its true potential, it is going to change how we all use and think about technology. The Meta 2 Development Kit announced last week is one of the closest glimpses at this potential that we have seen so far. While there are a few articles out there talking about the new headset announcement in general, I wanted to really focus in on what this means for developers.

For about a year and a half now, I have been lucky enough to have a Meta 1 Developer Kit and have spent a bunch of time experimenting and putting together my own concept prototype app. The SDK makes the process in Unity a lot easier than many would expect. The Meta 2 looks like it will be leaps and bounds ahead of the device I’ve spent countless hours tinkering with!

What is Meta?

Meta are a Silicon Valley-based company who’ve been working on augmented reality since a successful Kickstarter campaign in May 2013. Not long after that campaign, they were also accepted into the well-known Y Combinator program. In September 2014, they began shipping the Meta 1 Developer Kit to developers around the world. This kit looked like so:

The Meta 1 provides an augmented reality headset experience where 3D objects are projected in front of you within a limited field of view. These objects appear as if they are in the room with you and can be touched, grabbed and pinched, providing some great real world interaction! The augmented reality objects can even lock onto areas in the real world via square markers like this one, which I use for my own AR app:

Last week, they released the details of their entirely new headset concept — the Meta 2. The Meta 2 appears to have all the capabilities mentioned above and more. It looks like this:

Improved Field of View

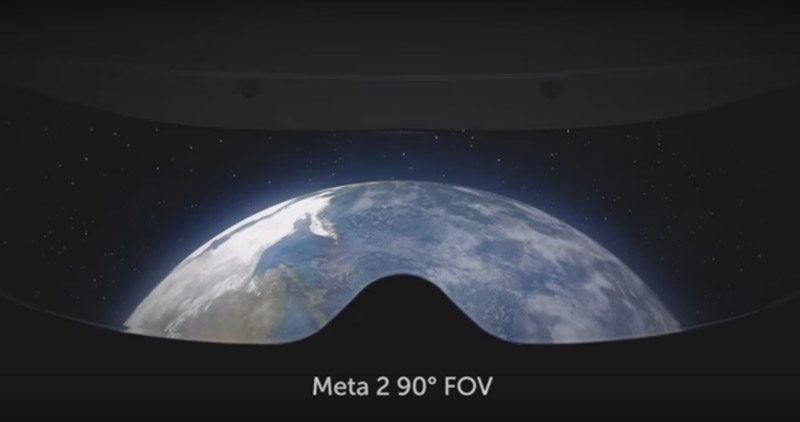

One of the challenges of AR headsets so far is getting as much of the headset view to be augmented as possible. The Meta 1, ODG R-7 Smart Glasses and Microsoft HoloLens each have a slightly limited field of view, best imagined as a transparent screen in front of your vision that reveals augmented objects when you look in their direction. The best image I have found which shows this field of view concept is this one from a Microsoft HoloLens demo video:

The Meta 1 came with two different lens hoods — one with a 23 degree field of view and another with a 32 degree field of view. The HoloLens has approximately a 30 degree field of view and ODG’s latest unreleased prototype has a 50 degree field of view. While each of those measurements might not be comparable (some are horizontal and others diagonal?), you can get a sense of the typical FOV range from those numbers.

The Meta 2 is well ahead of these, with a 90 degree field of view. This means that quite a large part of your view is now augmented. Meta’s Meta 2 Product Launch Video visualizes the field of view like so:

This will give developers a great way to showcase their augmented reality apps in a more immersive way and also expands what is possible within an AR application. A greater field of view opens up more possibilities in the interface that might otherwise have been confusing with the smaller view size.
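To make the field of view idea a little more concrete, here is a minimal Python sketch that checks whether an object at a given angle from where you are looking would fall inside a headset's augmented area. It treats every FOV figure quoted above as a horizontal angle, which is an assumption (some of them may be diagonal), and the name `is_in_view` is hypothetical rather than part of any Meta SDK.

```python
def is_in_view(object_angle_deg, fov_deg):
    """Return True if an object offset by object_angle_deg from the centre
    of your gaze falls inside a field of view of fov_deg degrees.

    The field of view is assumed to be horizontal and centred on the gaze.
    """
    return abs(object_angle_deg) <= fov_deg / 2.0


# FOV figures quoted in this article, all assumed to be horizontal.
HEADSET_FOV_DEG = {
    "Meta 1 (wide lens hood)": 32,
    "HoloLens": 30,
    "ODG prototype": 50,
    "Meta 2": 90,
}

if __name__ == "__main__":
    # A menu panel placed 30 degrees to the side of where the user is looking.
    panel_angle_deg = 30
    for name, fov_deg in HEADSET_FOV_DEG.items():
        print(name, is_in_view(panel_angle_deg, fov_deg))
```

Under these assumptions, a panel 30 degrees off to the side would only be visible on the 90 degree headset without turning your head, which is the kind of interface layout a wider field of view makes practical.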

High Resolution Display

The Meta 1 provided a 960 x 540 resolution for each eye (each eye has a separate projected screen). I have seen some specs that say the Microsoft HoloLens and ODG’s R-7 Smart Glasses have a larger 1280 × 720 resolution for each eye.

The Meta 2 has a 2560 x 1440 display overall, which is a high enough resolution to read text comfortably and see finer details. Given its field of view, that works out to 20 pixels per degree. I’m not quite sure how this measures up to the HoloLens and R-7 Smart Glasses, taking into account the different types of display technology.
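Pixels per degree is simply a pixel count divided by the angle it spans. The Python sketch below is a rough illustration using figures quoted in this article. Which axis each field of view is measured along, and whether the Meta 2's 2560 x 1440 panel is split between the two eyes, are assumptions on my part, so treat the results as ballpark comparisons only. The function names are made up for this example.

```python
import math


def pixels_per_degree(pixels, fov_deg):
    """Average number of pixels covering one degree of the field of view."""
    if fov_deg <= 0:
        raise ValueError("fov_deg must be positive")
    return pixels / fov_deg


def diagonal_pixels(width, height):
    """Length of a display's diagonal, measured in pixels."""
    return math.hypot(width, height)


if __name__ == "__main__":
    # Meta 1: 960 pixels across, assuming the 32 degree hood is a horizontal figure.
    print(round(pixels_per_degree(960, 32), 1))

    # Meta 2: assume (not confirmed) that each eye sees half of the panel
    # and that the 90 degree figure is measured along the diagonal.
    per_eye_diagonal = diagonal_pixels(2560 / 2, 1440)
    print(round(pixels_per_degree(per_eye_diagonal, 90), 1))
```

With those particular assumptions the Meta 2 figure lands near the quoted 20 pixels per degree, but different assumptions about the axis or the per-eye split give quite different numbers, which is part of why comparing specs across headsets is tricky.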

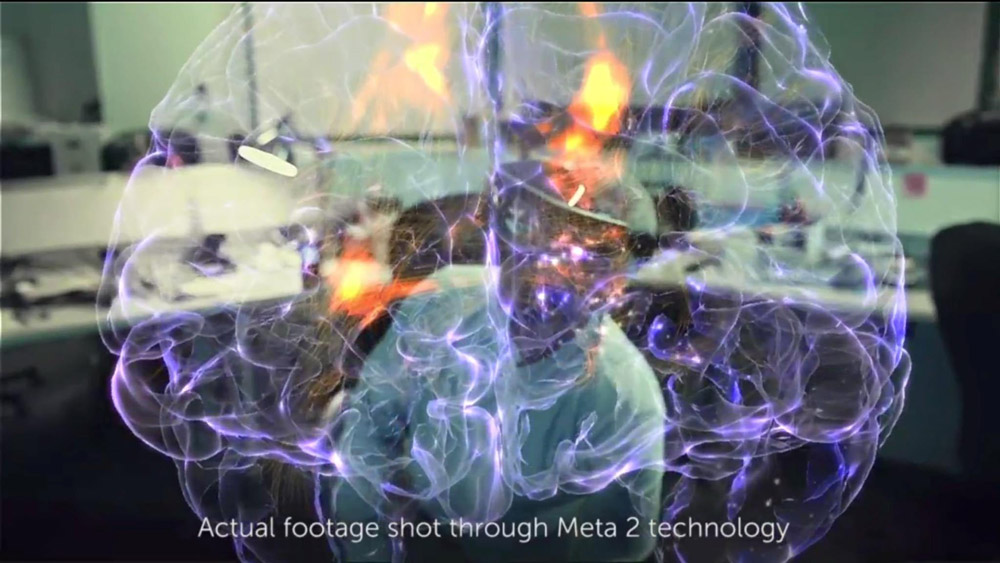

An image that shows a bit of a preview of the resolution is this one:

Source: Robert Scoble’s Facebook — he has posted up a few screens from the Meta 2 TED event, check his Facebook for a bunch more!

This is one of those areas where direct specs comparison probably isn’t helpful for developers. Instead, the potential experience is what’s going to be key here. Coupled with the 90 degree FOV, having such a fine level of detail means that your AR apps and interfaces can have clear and legible text. You can develop applications which rely on showing the finer details within your 3D models — fields that rely on precision, such as medicine, architecture and 3D modelling would definitely benefit here.

Run Full Blown Applications

The Meta 2 looks primed to replace your computer monitors as a full time display, providing an interface for virtual monitors all around you — this should excite all sorts of developers who’d love to simplify their vast array of monitors on their desk! The Meta 2 currently lists support for Windows applications including Microsoft Office, Adobe Creative Suite (imagine video production level applications in AR!) and Spotify. I can’t wait to see other apps start to become compatible too. Programming for augmented reality within augmented reality is a dream come true!

Source: Meta 2 product video

Tethered To Your PC (and Soon Mac)

One big difference between the Meta 2 and the headsets from Microsoft and ODG is that the Meta 2 needs to be tethered to your computer at all times. It currently runs on Windows 8.1 and 10, with Mac support hopefully coming later this year. The tethering seems to be a tradeoff to allow for the wider field of view, virtual monitor functionality with full blown app capabilities and so on. However, it also means not needing to worry about battery life.

For developers, this is definitely a limitation for short term use of the technology. Applications will work best when designed for uses where you stay in a certain area, rather than roaming around wearing your Meta 2 during your day-to-day activities.

As the technology advances, I’m sure Meta will look into an untethered design like those of the HoloLens and ODG headsets, so you could always design apps ready for when these possibilities appear in a future headset too.

You Can Wear Glasses!

One of the biggest drawbacks of headsets, especially VR headsets, is the difficulty of using them while wearing eyeglasses. Luckily, with the Meta 2’s new design, wearing eyeglasses isn’t an issue!

Natural Hand Interactions and Design Guidelines

The Meta 2 comes with improved hand tracking and neuroscience-driven interface design principles to guide developers in using it. The Meta team are working towards their “Neural Path of Least Resistance™” approach to computing which aims to help computing interfaces be completely intuitive for absolutely anyone to use based upon neuroscience principles.

These interface design principles and the improved hand tracking should make it much easier for developers to include natural interaction with augmented reality objects. Developers can leverage the work of the Meta neuroscience team rather than having to come up with these principles on the fly (not everyone is a user interface expert, especially when it comes to entirely new platforms like AR!). I’m hoping this helps AR applications built on the platform have a relatively simple and straightforward set of intuitive interaction principles as their baseline.

I do hope truly insightful developers also take these principles and expand upon them, bringing additional new design principles to this space to add to those designed by the Meta team.

Their website also says it can track objects such as a pen or paint brush, which sounds similar to Leap Motion’s item tracking — this could be very handy too in an augmented reality space!

Spatial Mapping

The Meta 2 headset has an algorithm in its arsenal (SLAM, Simultaneous Localization and Mapping) that tracks the user’s position in an environment, mapping the space around them so that objects can be placed on tables and real world surfaces. The HoloLens has a similar capability; however, paired with the Meta 2’s larger FOV, this could lead to some very immersive and exciting possibilities for developers!
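As a rough illustration of why position tracking matters, the Python sketch below converts a point that is fixed in the room into coordinates relative to the headset, given a tracked headset pose. It is a simplified top-down (2D) version with made-up names (`Pose`, `world_to_headset`). It is not Meta's SLAM implementation or SDK API, only the kind of coordinate transform that a tracked pose makes possible.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical top-down headset pose: position in metres, heading in degrees."""
    x: float
    y: float
    heading_deg: float  # 0 means facing along the room's +x axis, counterclockwise positive


def world_to_headset(pose, world_x, world_y):
    """Express a room-fixed point relative to the headset.

    Returns (forward, left): metres in front of the wearer and metres to
    their left, so a world-locked object can be drawn in the right place.
    """
    dx = world_x - pose.x
    dy = world_y - pose.y
    heading = math.radians(pose.heading_deg)
    # Rotate the offset by the inverse of the headset's heading.
    forward = dx * math.cos(heading) + dy * math.sin(heading)
    left = -dx * math.sin(heading) + dy * math.cos(heading)
    return forward, left


if __name__ == "__main__":
    # A virtual object pinned to a table corner at (2, 1) in room coordinates.
    table_corner = (2.0, 1.0)
    # The same object seen from two different tracked poses.
    for pose in (Pose(0.0, 0.0, 0.0), Pose(1.0, 0.0, 90.0)):
        print(pose, world_to_headset(pose, *table_corner))
```

The object's room coordinates never change; only the headset pose does. That is what lets an object appear to stay put on a table while you walk around it.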

Slightly More Affordable

The Meta 2 Headset is available for pre-order at US$949 — much more attainable for early developers on a budget than the US$3000 for HoloLens or US$2750 for the ODG R-7 Smart Glasses. The HoloLens and ODG R-7 Smart Glasses are self-contained computer systems, which increases the price significantly. Many developers may be much more comfortable paying about a third of the price to get in and build AR applications on a tethered system to get started.

Works With Unity

Meta application development works via a Unity SDK. This is a big draw for me — the time I spent getting well versed in Unity during my own Meta application development was also especially handy when it came to building experiences for other platforms like virtual reality. It also means many developers who are already skilled in Unity will be able to get into Meta development without needing to learn an entirely new development platform.

Conclusion

The augmented reality space is exciting right now — Meta, Microsoft HoloLens, ODG’s Smart Glasses and the others in this space (there are quite a few!) are really innovating and bringing this closer to mainstream potential. While it is still likely to take two to three years to truly get this out there to the masses, the technology is clearly ready for developers to start experimenting with. The Meta 2 headset looks to be a great option with its larger FOV, high resolution and reasonable price point.

If you are interested in building for the Meta 2, pre-orders are available on the Meta homepage. For developers with the Meta 1 stashed away in their closet — we’ve also got a guide to Getting started with augmented reality and the Meta 1 headset if you’d like to get back into it after this news!

Developers out there — I’d love to hear your thoughts on the Meta 2 headset. Have you pre-ordered yours?