Content Research: The Power of User Interviews

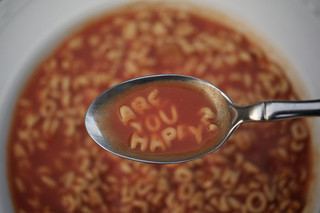

(or How to Find Out What Makes Your Users Happy)

If you’re in UX – or you have been – you may already be familiar with the idea of doing user interviews to find out about the user experience.

But using interviews to research content? How does that work?

Last time I mentioned that we’d received some unexpected survey responses through both our open-ended question and spontaneous responses to our CTA email. A lot of those responses touched on an idea that I was barely conscious of: users who were struggling with our product asked others for help with it.

Again, this is something that can’t be tracked using analytics or on-site means. The survey responses showed that individuals asked their colleagues for help with the software. But I had no idea who those colleagues were. Were they:

- the person at the next desk?

- an administrator of our product?

- anyone who was more experienced?

I also wanted a clear view of the overall picture of ways users “solved problems” they experienced with our product. I work on the support site, but we also have account managers, a support helpdesk, and in-house administrators making up the “help” puzzle.

I needed to talk to real people.

What are interviews? What do they give you?

Interviews involve speaking to people — either in person or over the phone. I have a loose set of questions which really act as openers for lines of discussion. They cover the main questions I have about what the big “software support” picture is for our product, and how our content fits into that.

What we’re getting from our interviews is qualitative, scenario-style information about the kinds of things users do when they have a problem with the software.

Live interviews (whether in person or over the phone) give you something unique: a sense of the personality of the user, and valuable auditory feedback. If I ask a user if they’ve ever used the support site, the speed and tone of their response give cues as to whether they use it, whether they use it often, and how they might feel about it. Do they enter with confidence or dread?

Those cues can lead me to ask extra questions, digging deeper into issues that are sensed rather than explicitly spoken. Issues that are almost always invisible in the raw data.

How do interviews work?

I drafted a rough script in an attempt to touch on the main knowledge gaps I knew I had, and spoke to a friend whose company uses our software. She put me onto a colleague, who I called.

Even though she’d told him I’d call, I didn’t call and expect to interview him. I called to break the ice and explain what I was doing in a way that was intended not to bias his expectations (I didn’t tell him I worked on the support site, for example). We made a time for a 15-minute interview a couple of days later, and at that time I called back and interviewed over the phone, taking notes as I went.

This is a pretty rough-and-ready way to get started, but as I was formulating the idea of the interview, I wasn’t really sure where things were heading. You might argue that it would be ideal to speak to users face to face, and I wouldn’t disagree.

That would deliver information about the environments in which our users use the software, and how their workplaces operate. It could tell us, for example, if this particular user has a desk-buddy to ask for help, or if they’re in an office of their own. Do they use a chat app at work? If not, how far would they have to walk to ask someone to help with a problem on our system? In that case, would they be more likely to call our helpdesk instead? The questions just keep coming.

Even so, our little phone interview technique has delivered some great information about where our content fits into the scheme of things.

Interview wins

Who’s not winning in a user interview? As the researcher, you get a very strong sense of the kinds of people actually grappling with your product. As the user, you get the sense that the brand cares about you — you might even air a few grievances in the process, and (hopefully) feel like you’re being heard.

Our interviews helped us to realise that not only is our support site not users’ first port of call when they face a problem, but the entire suite of help offered by our company (from account managers to email and phone support) isn’t either.

When they struggle with the software, users, obviously, ask someone they work with about it.

We also learned that people designated as in-house administrators of our product at client companies do field plenty of questions from users — even though often, they may not know the answers at the time. The interviews flagged an opportunity to better support those administrators to support others in their companies.

Basically, the interviews are helping us to build a much richer understanding of the realities of support within the day-to-day context in which our product is used.

Interview limitations

The main limitation with interviews is that they give you qualitative information, often from only a handful of users. You probably don’t want to rush off on the basis of a conversation with one user and make sweeping changes to your site. So it’s important to keep what you learn from each interview in perspective.

Another limitation is time. If your interview’s going to take what users perceive as “too long”, you might struggle to find subjects to talk with.

Remember too that on-site, face-to-face interviews are likely to be more of a burden to your subjects than phone interviews. And the more difficult you make the interview, the fewer subjects you’ll get.

Interview tips and tricks

Like surveys, which we looked at last week, interviews can seem easy enough to do at first glance. You work out what you want to know, and ask the subject the questions. Right?

Well, maybe. Look at any news or current affairs program and you’ll see that there are different interview styles, and good and bad interviewers. Anyone can ask questions, but there’s an art to a good interview.

The best tip I can give is to master that art as best you can, using resources like this article from UX Mastery as a starting point. Sure, it’s about usability interviews, but that doesn’t mean it’s not relevant. You’re effectively researching how users use your content, so while some of the advice may not translate directly, it should give you the impetus to begin to fill gaps yourself.

The other key to interviewing is to make the subject as comfortable as possible. Being interviewed is both flattering and nerve-wracking, so the more natural you can be with the subject, and the more at ease, the more open they’re likely to be with you. Make this your focus and you could wind up getting some real gems from your interviews.

Dealing with interview data

With the techniques we’ve looked at previously, click tracking and surveys, the data has been relatively easy to deal with, as it is at least largely quantitative. But what do you do with interviews?

I see the interviews as giving me information that helps shape my understanding of the way users use the content — that is, it provides a backdrop for the click tracking and survey information.

So the first thing to do is write each interview up as soon as you’ve finished it. This helps me to consolidate what I gleaned in the interview, and gives me a clearer picture of what that user was all about.

A good way to use your interview writeups may well be to distill them into the equivalent of user personas, where each one has a name, a role or business, and salient points that relate to how they use your content. You may not create content specifically for single user types (although you may).

But either way, the personas will remind you of the critical elements of your content’s use case, and you can make sure you consider each of those critical elements as you design, create, and present content on your site.
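If you keep your write-ups in a structured form, the persona idea above can be sketched in a few lines of Python. This is only an illustration, not something we actually built: the `Persona` class, the `checklist` helper, and the two sample personas (“Sam” and “Alex”) are all hypothetical, with salient points loosely based on the kinds of findings described earlier.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Persona:
    """One interview write-up distilled into a persona (hypothetical structure)."""
    name: str
    role: str
    salient_points: list[str] = field(default_factory=list)


def checklist(personas: list[Persona]) -> list[str]:
    """Collect every persona's salient points into one list, skipping repeats.

    The order of first appearance is preserved, so the result can be read
    top to bottom as a review checklist when creating content.
    """
    seen: set[str] = set()
    items: list[str] = []
    for persona in personas:
        for point in persona.salient_points:
            if point not in seen:
                seen.add(point)
                items.append(point)
    return items


# Made-up personas for illustration only.
personas = [
    Persona(
        name="Sam",
        role="In-house administrator",
        salient_points=[
            "Fields questions from colleagues",
            "May not know the answer at the time",
        ],
    ),
    Persona(
        name="Alex",
        role="Occasional user",
        salient_points=[
            "Asks a colleague before anything else",
            "Rarely visits the support site",
        ],
    ),
]

for item in checklist(personas):
    print("-", item)
```

Running through that checklist before publishing a new piece of content is one simple way to make sure each persona’s critical points have been considered.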

What’s next?

There’s one technique we haven’t looked at yet, though it’s a little like a survey: it’s user polling. Next time, we’ll turn our attention to user feedback and ratings widgets, and see what they add to the overall picture you have of how users consume your content — and what value it delivers.

But for now, let us know if you’ve done any user interviews. What are your tips and tricks for good user interviewing? We’d love to hear them in the comments.