How to Perform Data Analysis in Python Using the OpenAI API

In this tutorial, you’ll learn how to use Python and the OpenAI API to perform data mining and analysis on your data.

Manually analyzing datasets to extract useful data, or even using simple programs to do the same, can often get complicated and time-consuming. Luckily, with the OpenAI API and Python it’s possible to systematically analyze your datasets for interesting information without over-engineering your code and wasting time. This approach can serve as a general-purpose solution for data analysis, reducing the need to use different methods, libraries and APIs to analyze different types of data and data points inside a dataset.

Let’s walk through the steps of using the OpenAI API and Python to analyze your data, starting with how to set things up.

Setup

To mine and analyze data through Python using the OpenAI API, install the openai and pandas libraries:

pip3 install openai pandas

After you’ve done that, create a new folder and create an empty Python file inside your new folder.

Analyzing Text Files

For this tutorial, I thought it would be interesting to make Python analyze Nvidia’s latest earnings call.

Download the latest Nvidia earnings call transcript that I got from The Motley Fool and move it into your project folder.

Then open your empty Python file and add this code.

The code reads the Nvidia earnings transcript that you’ve downloaded and passes it to the extract_info function as the transcript variable.

The extract_info function sends the prompt and the transcript together as a single user message, with temperature=0.3 and model="gpt-3.5-turbo-16k". It uses the “gpt-3.5-turbo-16k” model because its larger context window can handle long texts such as this transcript. The response comes from the openai.ChatCompletion.create endpoint, which is the interface provided by versions of the openai library before 1.0:

completions = openai.ChatCompletion.create(
    model="gpt-3.5-turbo-16k",
    messages=[
        {"role": "user", "content": prompt+"\n\n"+text}
    ],
    temperature=0.3,
)
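For reference, here is a minimal sketch of how an extract_info function could be built around this call. The linked script is not reproduced here, so the function signature is an assumption, and the create parameter and fake_create helper are hypothetical additions that let you try the function with a stand-in instead of a real API call. The real call path assumes a pre-1.0 openai package with openai.api_key already set.

```python
from types import SimpleNamespace


def extract_info(text, prompt, create=None):
    """Send prompt + text as one user message and return the reply text."""
    if create is None:
        # Assumption: the pre-1.0 openai package is installed and
        # openai.api_key has been set elsewhere.
        import openai
        create = openai.ChatCompletion.create

    completions = create(
        model="gpt-3.5-turbo-16k",
        messages=[{"role": "user", "content": prompt + "\n\n" + text}],
        temperature=0.3,
    )
    return completions.choices[0].message.content


def fake_create(model, messages, temperature):
    """Hypothetical stand-in: reports the input size instead of calling the API."""
    reply = f"received {len(messages[0]['content'])} characters"
    message = SimpleNamespace(role="assistant", content=reply)
    return SimpleNamespace(choices=[SimpleNamespace(index=0, message=message)])


print(extract_info("transcript text", "Extract the revenue:", create=fake_create))
```

Passing the API call in as a parameter is only a convenience for testing without spending credits. Leaving create unset falls back to the real endpoint.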

The full input will look like this:

Extract the following information from the text:

Nvidia's revenue

What Nvidia did this quarter

Remarks about AI

Nvidia earnings transcript goes here
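One way to assemble that input is sketched below. The build_user_input helper and the one-item-per-line layout are assumptions made for illustration. Only the prompt + "\n\n" + text concatenation comes from the call shown earlier.

```python
def build_user_input(items, text):
    """Join the instruction, the items to extract, and the document text."""
    prompt = "Extract the following information from the text:\n" + "\n".join(items)
    # Same concatenation as in the API call: prompt, blank line, then the text.
    return prompt + "\n\n" + text


items = ["Nvidia's revenue", "What Nvidia did this quarter", "Remarks about AI"]
user_input = build_user_input(items, "Nvidia earnings transcript goes here")
print(user_input)
```

Keeping the items in a list makes it easy to change what gets extracted without editing the prompt string by hand.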

Now, if we pass the input to the openai.ChatCompletion.create endpoint, the full output will look like this:

{
  "choices": [
    {
      "finish_reason": "stop",
      "index": 0,
      "message": {
        "content": "Actual response",
        "role": "assistant"
      }
    }
  ],
  "created": 1693336390,
  "id": "request-id",
  "model": "gpt-3.5-turbo-16k-0613",
  "object": "chat.completion",
  "usage": {
    "completion_tokens": 579,
    "prompt_tokens": 3615,
    "total_tokens": 4194
  }
}

As you can see, it returns the text response as well as the token usage of the request, which can be useful if you’re tracking your expenses and optimizing your costs. But since we’re only interested in the response text, we get it by specifying the completions.choices[0].message.content response path.
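The sketch below shows how the reply text and the token counts could be read from such a response. It uses a plain dict that mirrors the fields shown above rather than a live API response, and the summarize_response helper is a hypothetical name.

```python
# A plain dict that mirrors the fields of the response shown above.
response = {
    "choices": [
        {
            "finish_reason": "stop",
            "index": 0,
            "message": {"content": "Actual response", "role": "assistant"},
        }
    ],
    "usage": {"completion_tokens": 579, "prompt_tokens": 3615, "total_tokens": 4194},
}


def summarize_response(resp):
    """Return the reply text and the token counts from a chat completion response."""
    choice = resp["choices"][0]
    usage = resp["usage"]
    return {
        "text": choice["message"]["content"],
        # "stop" means the model finished on its own rather than being cut off.
        "complete": choice["finish_reason"] == "stop",
        "prompt_tokens": usage["prompt_tokens"],
        "completion_tokens": usage["completion_tokens"],
        "total_tokens": usage["total_tokens"],
    }


summary = summarize_response(response)
print(summary["text"], summary["total_tokens"])
```

Logging the token counts next to each result is one simple way to keep an eye on costs while you experiment.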

If you run your code, you should get a similar output to what’s quoted below:

From the text, we can extract the following information:

- Nvidia’s revenue: In the second quarter of fiscal 2024, Nvidia reported record Q2 revenue of 13.51 billion, which was up 88% sequentially and up 101% year on year.

- What Nvidia did this quarter: Nvidia experienced exceptional growth in various areas. They saw record revenue in their data center segment, which was up 141% sequentially and up 171% year on year. They also saw growth in their gaming segment, with revenue up 11% sequentially and 22% year on year. Additionally, their professional visualization segment saw revenue growth of 28% sequentially. They also announced partnerships and collaborations with companies like Snowflake, ServiceNow, Accenture, Hugging Face, VMware, and SoftBank.

- Remarks about AI: Nvidia highlighted the strong demand for their AI platforms and accelerated computing solutions. They mentioned the deployment of their HGX systems by major cloud service providers and consumer internet companies. They also discussed the applications of generative AI in various industries, such as marketing, media, and entertainment. Nvidia emphasized the potential of generative AI to create new market opportunities and boost productivity in different sectors.

As you can see, the code extracts the info that’s specified in the prompt (Nvidia’s revenue, what Nvidia did this quarter, and remarks about AI) and prints it.

Analyzing CSV Files

Analyzing earnings-call transcripts and text files is cool, but to systematically analyze large volumes of data, you’ll need to work with CSV files.

As a working example, download this Medium articles CSV dataset and move it into your project folder.

If you take a look into the CSV file, you’ll see that it has the “author”, “claps”, “reading_time”, “link”, “title” and “text” columns. For analyzing the Medium articles with OpenAI, you only need the “title” and “text” columns.

Create a new Python file in your project folder and paste this code.

This code is a bit different from the code we used to analyze a text file. It reads CSV rows one by one, extracts the specified pieces of information, and adds them into new columns.

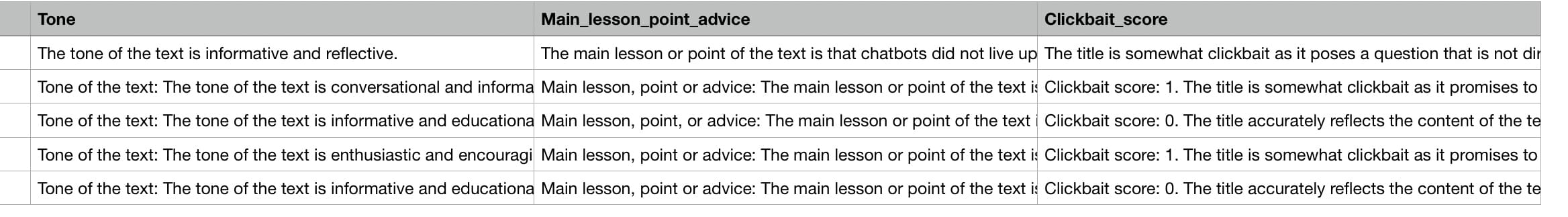

For this tutorial, I’ve picked a CSV dataset of Medium articles, which I got from HSANKESARA on Kaggle. This CSV analysis code will find the overall tone and the main lesson/point of each article, using the “title” and “text” columns of the CSV file. Since I always come across clickbaity articles on Medium, I also thought it would be interesting to tell it to find how “clickbaity” each article is by giving each one a “clickbait score” from 0 to 3, where 0 is no clickbait and 3 is extreme clickbait.
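Because the model returns the score as text, you may want to turn it into a number and check that it falls in the 0 to 3 range before using it. The sketch below assumes the answer contains something like “Clickbait score: 2”. That format and the parse_clickbait_score helper are assumptions, not part of the linked code.

```python
import re


def parse_clickbait_score(answer):
    """Return the integer after 'score:' if it is between 0 and 3, else None."""
    match = re.search(r"score\s*:\s*(\d+)", answer, re.IGNORECASE)
    if match is None:
        return None
    score = int(match.group(1))
    return score if 0 <= score <= 3 else None


print(parse_clickbait_score("Clickbait score: 2"))
print(parse_clickbait_score("Clickbait score: 7"))
```

Returning None for anything unexpected makes it easy to spot rows where the model ignored the requested scale.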

Before I explain the code, note that analyzing the entire CSV file would take too long and cost too many API credits, so for this tutorial, I’ve made the code analyze only the first five articles using df = df[:5].

You may be confused about the following part of the code, so let me explain:

for di in range(len(df)):
    title = titles[di]
    abstract = articles[di]
    additional_params = extract_info('Title: '+str(title) + '\n\n' + 'Text: ' + str(abstract))
    try:
        result = additional_params.split("\n\n")
    except:
        result = {}

This code iterates through all the articles (rows) in the CSV file and, with each iteration, gets the title and body of each article and passes it to the extract_info function, which we saw earlier. It then turns the response of the extract_info function into a list to separate the different pieces of info using this code:

try:
    result = additional_params.split("\n\n")
except:
    result = {}
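Splitting on exactly "\n\n" relies on the model always separating its answers with a single blank line. A slightly more tolerant variation is sketched below. The split_response helper and the sample reply are made up for illustration.

```python
import re


def split_response(response):
    """Split a model reply into its blank-line-separated parts."""
    if not isinstance(response, str):
        # Mirrors the fallback above: anything that is not text yields no parts.
        return []
    parts = re.split(r"\n\s*\n", response.strip())
    return [part.strip() for part in parts if part.strip()]


reply = "Tone: informative\n\nMain lesson: test on small samples\n \nClickbait score: 1"
print(split_response(reply))
```

The regular expression treats any run of blank or whitespace-only lines as one separator, so stray spaces between answers don’t produce empty entries.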

Next, it adds each piece of info into a list, and if there’s an error (if there’s no value), it adds “No result” into the list:

try:
    apa1.append(result[0])
except Exception as e:
    apa1.append('No result')

try:
    apa2.append(result[1])
except Exception as e:
    apa2.append('No result')

try:
    apa3.append(result[2])
except Exception as e:
    apa3.append('No result')
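The three try/except blocks follow the same pattern, so they could be folded into one small helper. This is a sketch only. The pick function and the sample replies are hypothetical, while the apa1, apa2 and apa3 names match the lists used above.

```python
def pick(result, index, default="No result"):
    """Return result[index], or the default when that position is missing."""
    try:
        return result[index]
    except (IndexError, KeyError, TypeError):
        return default


# Hypothetical split replies for three articles; the second is incomplete.
replies = [
    ["Tone: upbeat", "Lesson: ship early", "Score: 1"],
    ["Tone: neutral"],
    [],
]

apa1, apa2, apa3 = [], [], []
for result in replies:
    apa1.append(pick(result, 0))
    apa2.append(pick(result, 1))
    apa3.append(pick(result, 2))

print(apa2)
```

Whichever form you use, every list ends up with exactly one entry per row, which is what the column assignment in the next step requires.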

Finally, after the for loop is finished, the lists that contain the extracted info are inserted into new columns in the CSV file:

df = df.assign(Tone=apa1)
df = df.assign(Main_lesson_or_point=apa2)
df = df.assign(Clickbait_score=apa3)

As you can see, it adds the lists into new CSV columns that are named “Tone”, “Main_lesson_or_point” and “Clickbait_score”.

It then writes the DataFrame, including the new columns, to a CSV file with index=False:

df.to_csv("data.csv", index=False)

You specify index=False so that pandas doesn’t write the DataFrame’s row index as an extra unnamed column. Without it, a new index column would be added every time you read the file and save it again.
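To see the effect of index=False, here is a small sketch that writes a made-up two-row DataFrame to in-memory buffers instead of a real file and reads it back both ways. The sample data and variable names are for illustration only.

```python
import io

import pandas as pd

df = pd.DataFrame({"title": ["A", "B"], "text": ["first", "second"]})
df = df.assign(Tone=["neutral", "upbeat"])

# Written without index=False, the row index becomes an extra unnamed column.
with_index = io.StringIO()
df.to_csv(with_index)
with_index.seek(0)
cols_with_index = list(pd.read_csv(with_index).columns)

# With index=False, only the real columns are written.
without_index = io.StringIO()
df.to_csv(without_index, index=False)
without_index.seek(0)
cols_without_index = list(pd.read_csv(without_index).columns)

print(cols_with_index)
print(cols_without_index)
```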

Now, if you run your Python file, wait for it to finish and check the CSV file in a CSV file viewer, you’ll see the new columns, as pictured below.

If you run your code multiple times, you’ll notice that the generated answers differ slightly. This is because the code uses temperature=0.3 to add a bit of creativity into its answers, which is useful for subjective topics like clickbait.

Working with Multiple Files

If you want to automatically analyze multiple files, you need to first put them inside a folder and make sure the folder only contains the files you’re interested in, to prevent your Python code from reading irrelevant files. Then import the glob module in your Python file using import glob. It’s part of Python’s standard library, so there’s nothing to install.

In your Python file, use this code to get a list of all the files in your data folder:

data_files = glob.glob("data_folder/*")
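If the folder might contain other file types, a narrower pattern is one way to keep them out. The sketch below builds a temporary folder with made-up file names so that it runs anywhere. In your own code, data_folder would be your real data folder.

```python
import glob
import os
import tempfile

# Build a throwaway folder so the example runs anywhere.
data_folder = tempfile.mkdtemp()
for name in ["a.txt", "b.csv", "notes.md"]:
    with open(os.path.join(data_folder, name), "w") as f:
        f.write("placeholder")

# Each pattern keeps only one file type; sorted() makes the order predictable.
txt_files = sorted(glob.glob(os.path.join(data_folder, "*.txt")))
csv_files = sorted(glob.glob(os.path.join(data_folder, "*.csv")))

# The returned paths already include the folder, so they can be opened directly.
print([os.path.basename(p) for p in txt_files])
print([os.path.basename(p) for p in csv_files])
```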

Then put the code that does the analysis in a for loop:

for i in range(len(data_files)):

Inside the for loop, read the contents of each file like this for text files:

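with open(data_files[i], "r") as f:
    txt_data = f.read()

And like this for CSV files:

df = pd.read_csv(data_files[i])

Note that glob.glob already returns each path with the data_folder/ prefix included, so the paths are passed to open and pd.read_csv as they are.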

In addition, make sure to save the output of each file analysis into a separate file using something like this:

df.to_csv(f"output_folder/data{i}.csv", index=False)
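Putting these steps together for text files, a minimal end-to-end sketch could look like the following. The analyze function is a hypothetical stand-in for the API-based analysis (it only counts words), analyze_folder is an assumed helper name, and the folders are created in a temporary location so the example can run without any setup.

```python
import glob
import os
import tempfile


def analyze(text):
    """Hypothetical stand-in for the API-based analysis: counts words."""
    return f"{len(text.split())} words"


def analyze_folder(data_folder, output_folder):
    """Analyze every .txt file in data_folder and write one result file each."""
    os.makedirs(output_folder, exist_ok=True)
    written = []
    data_files = sorted(glob.glob(os.path.join(data_folder, "*.txt")))
    for i, path in enumerate(data_files):
        with open(path, "r") as f:
            txt_data = f.read()
        out_path = os.path.join(output_folder, f"data{i}.txt")
        with open(out_path, "w") as out:
            out.write(analyze(txt_data))
        written.append(out_path)
    return written


# Try it on a temporary folder with two small files.
root = tempfile.mkdtemp()
data_dir = os.path.join(root, "data_folder")
os.makedirs(data_dir)
for name, body in [("one.txt", "hello world"), ("two.txt", "a b c")]:
    with open(os.path.join(data_dir, name), "w") as f:
        f.write(body)

outputs = analyze_folder(data_dir, os.path.join(root, "output_folder"))
print(len(outputs))
```

Creating the output folder up front with os.makedirs avoids an error on the first write if the folder doesn’t exist yet.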

Conclusion

Remember to experiment with your temperature parameter and adjust it for your use case. If you want the AI to give more varied, creative answers, increase your temperature, and if you want more consistent, focused answers, lower it.
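To build some intuition for why this happens, here is a toy illustration of how a sampling temperature typically reshapes a probability distribution. It shows the general technique with made-up scores and is not a description of OpenAI’s actual implementation.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by a sampling temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
low = softmax_with_temperature(logits, 0.3)
high = softmax_with_temperature(logits, 1.5)
print(round(max(low), 3), round(max(high), 3))
```

At the lower temperature, almost all of the probability lands on the top-scoring candidate, so repeated runs tend to agree. At the higher temperature, the alternatives get a larger share and the answers vary more.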

The combination of OpenAI and Python data analysis has many applications apart from article and earnings call transcript analysis. Examples include news analysis, book analysis, customer review analysis, and much more! That said, when testing your Python code on big datasets, make sure to only test it on a small part of the full dataset to save API credits and time.